Foundation models are general-purpose base models that can be adapted to many tasks. A building analogy makes the idea easy to grasp.

When constructing a building, a basic structure is built first, and the architect then builds the design on top of it. These basic structures are similar across buildings of the same kind: a residential apartment or house is laid out around bedrooms, a kitchen, and so on, while a commercial building has a different structure.

Similarly, foundation models are the basic structures that are fine-tuned for industry-specific goals to get the best results.

What are Foundation Models?

A foundation model is a machine learning model trained on a very large dataset, which is why it needs a lot of processing power. During this initial training period (pre-training), the model picks up a broad range of tasks and abilities. In contrast to typical AI models, which are usually built and trained for a specific purpose, foundation models are adaptable and can be adjusted and refined for a wide range of applications.

Foundation Models in one line:

They are large deep-learning neural networks trained on large amounts of mostly unlabeled data.

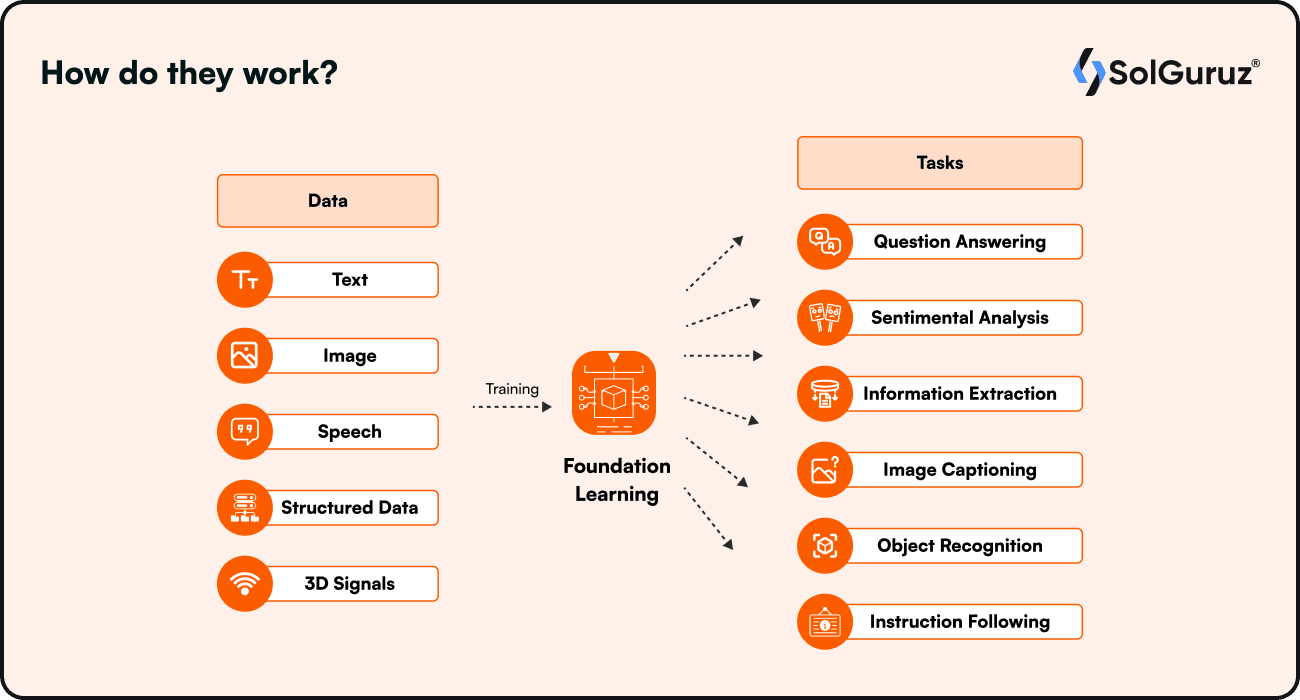

How Do They Work?

Foundation models are a form of generative artificial intelligence (generative AI). From one or more inputs (prompts), given as instructions in human language, they generate output. The models are built on complex neural networks, such as transformers, variational autoencoders (VAEs), and generative adversarial networks (GANs).

Even though each kind of network operates differently, they are all based on similar concepts. Generally speaking, a foundation model (FM) predicts the next item in a sequence using previously learned patterns and relationships. In image generation, for instance, the model examines an image and produces a sharper, better-defined version of it. Similarly, for text, the model predicts the next word based on the preceding words and their context, choosing it from a probability distribution over candidate words.
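The next-word step can be illustrated with a minimal sketch. The vocabulary and the raw scores (logits) below are made up for illustration; a real model would compute the scores from the preceding words. The sketch only shows how scores become a probability distribution and how a word is then chosen from it.

```python
import math
import random

def softmax(logits):
    """Convert raw scores into a probability distribution that sums to 1."""
    peak = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]

# Hypothetical candidates and scores for the context "The cat sat on the".
vocab = ["mat", "moon", "car", "sofa"]
logits = [3.2, 0.1, -1.0, 2.5]

probs = softmax(logits)

# Greedy decoding: always take the most probable word.
greedy_word = vocab[probs.index(max(probs))]

# Sampling: draw a word in proportion to its probability.
rng = random.Random(0)
sampled_word = rng.choices(vocab, weights=probs, k=1)[0]

for word, p in zip(vocab, probs):
    print(f"{word}: {p:.3f}")
print("greedy choice:", greedy_word)
print("sampled choice:", sampled_word)
```

Greedy decoding gives the same answer every time, while sampling lets less likely words appear occasionally, which is one reason the same prompt can produce different responses.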

Foundation models use self-supervised learning, which generates labels from the input data itself. This means that no one has to instruct or train the model with labeled training data sets. This property distinguishes foundation models, including LLMs, from earlier ML systems that relied on supervised or unsupervised learning.
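To make "generating labels from input data" concrete, here is a small sketch for text. It assumes whitespace tokenization and a fixed context window, which are simplifications; the function name `make_training_pairs` is hypothetical. Each training example is built from the raw text alone: the preceding words are the input and the word that follows is the label.

```python
def make_training_pairs(text, context_size=3):
    """Build (context, next_word) pairs from raw, unlabeled text."""
    tokens = text.split()  # simplification: real models use subword tokenizers
    pairs = []
    for i in range(context_size, len(tokens)):
        context = tokens[i - context_size:i]  # the words the model sees
        target = tokens[i]                    # the "label" comes from the text itself
        pairs.append((context, target))
    return pairs

pairs = make_training_pairs("foundation models learn patterns from raw text")
for context, target in pairs:
    print(context, "->", target)
```

No human annotation is involved at any point, which is what allows training on very large text collections.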

Importance of Foundation Models

Artificial intelligence has advanced dramatically with the introduction of foundation models, which provide numerous advantages in a variety of fields.

Adaptability and Versatility

The adaptability of foundation models is one of their main advantages. These models are not limited to a single task and can be tailored to carry out many different ones. This is especially true of models such as object detection foundation models, which can be customized for specific needs.

Effectiveness in Acquiring Knowledge and Information

The capacity of foundation models to learn effectively from big datasets is another area of strength. Their extensive training gives them a thorough grasp of the intricate correlations and patterns found in the data.

Improved Performance and Accuracy

The improved accuracy and performance of foundation models are a result of their size and depth. For applications like medical imaging or security monitoring, where accuracy is critical, vision foundation models prove to be very beneficial.

Reduced Cost and Development Time

AI applications can be developed much more quickly and cheaply by using foundation models. Instead of starting from scratch, developers and researchers can build on pre-trained models, because these models offer a solid foundation.
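One common way to build on a pre-trained model is to keep it frozen and train only a small task-specific layer (a "head") on top of its outputs. The sketch below illustrates that pattern under loud assumptions: `pretrained_features` is a hypothetical stand-in for a real pre-trained model, and the toy task (is the absolute value of a number greater than 1?) is invented for illustration.

```python
import math

def pretrained_features(x):
    """Hypothetical stand-in for a frozen pre-trained model.
    It is never updated; it only turns an input into features."""
    return [x, x * x]

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def score(x, weights, bias):
    feats = pretrained_features(x)
    return sigmoid(sum(w * f for w, f in zip(weights, feats)) + bias)

def train_head(inputs, labels, lr=0.1, epochs=500):
    """Train only the small head (weights and bias) with gradient descent."""
    weights, bias = [0.0, 0.0], 0.0
    for _ in range(epochs):
        for x, y in zip(inputs, labels):
            feats = pretrained_features(x)
            err = score(x, weights, bias) - y  # gradient of the log loss
            weights = [w - lr * err * f for w, f in zip(weights, feats)]
            bias -= lr * err
    return weights, bias

# Small labeled dataset for the downstream task: label 1 when |x| > 1.
inputs = [-2.0, -1.5, -0.5, 0.0, 0.5, 1.5, 2.0]
labels = [1, 1, 0, 0, 0, 1, 1]

weights, bias = train_head(inputs, labels)

def predict(x):
    return score(x, weights, bias) >= 0.5

print(predict(3.0), predict(0.2), predict(-3.0))
```

Only three numbers are learned here, and seven labeled examples are enough, because the frozen features already carry the useful structure. That is the cost and time saving in miniature; full fine-tuning, which also updates the pre-trained weights, follows the same idea at a larger scale.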

How are LLMs Different from Foundation Models?

The new technologies built around foundation models are already having an effect on our day-to-day activities. Large language models and foundation models are not the same thing, even though the terms are occasionally used synonymously. Foundation models, as previously mentioned, are large deep learning models that have been pre-trained on enormous datasets and can be customized for a variety of downstream purposes. Large Language Models (LLMs) are a subset of foundation models that handle natural language processing (NLP) tasks. LLMs can complete a wide range of text-based tasks, including comprehending context, answering questions, composing essays, summarizing documents, and writing code.

Drawbacks of Foundation Models

Foundation models respond on the basis of their training data, and they can even respond to prompts on topics they were not explicitly trained on. However, they have some shortcomings. The following are some of the difficulties that foundation models face:

Infrastructure Needs

It is costly and time-consuming to create a foundation model from scratch, and training could take several months.

Development of the Front-end

To create useful applications, developers must incorporate foundation models into a software stack that includes tools for prompt engineering, fine-tuning, and pipeline engineering.

Inability to Understand

While foundation models can offer factually and grammatically correct replies, they struggle to understand the prompt's context. Furthermore, they lack psychological and social awareness.

Untrustworthy Responses

Responses to inquiries regarding specific topics may not always be trustworthy and may occasionally be unsuitable, harmful, or inaccurate.

Types of Foundation Models

There are many different types of foundation models, each with special qualities and uses. The following are a few noteworthy foundation model types:

1) Foundation Models with NLP

One of the most common types of foundation models is the language model, such as OpenAI's GPT series. They are trained on huge text corpora and can understand and generate human-like language. These models perform exceptionally well in tasks like question answering, summarization, and machine translation.

2) Foundation models with Vision

Language models concentrate on textual data, whereas vision models specialize in the creation and interpretation of images. Models like OpenAI's CLIP are pre-trained on large-scale datasets of images paired with text, which allows them to identify and classify visual information. Their applications include object detection, image classification, and even caption generation.

3) Multimodal Foundation Models

These models include language and vision functionalities. They are able to produce and process both visual and textual data. Tasks like picture captioning and visual question answering, which require both textual and visual inputs, are especially well-suited for these models.

4) Domain-Specific Models

Certain foundation models are designed for use in certain areas, including the legal, banking, or healthcare sectors. Due to their prior training on domain-specific data, these models comprehend and produce language appropriate for particular domains. For developers and academics working on specific applications, they offer a place to start.

Use Cases of Foundation Models

Having explored the multiple facets of foundation models, it is safe to say they have broad applications. Foundation models are used in areas such as:

Language Models

GPT-3 and other language models are the epitome of how foundation models can comprehend and produce human language. With billions of parameters in its comprehensive architecture, GPT-3 is trained on a large corpus of textual data. The model can now handle a wide range of linguistic activities, including creative writing and translation, thanks to this training. The efficacy of artificial intelligence models in language processing is demonstrated by their capacity to comprehend context and produce logical, contextually relevant text. These models have applications far beyond text generation; they are being used in customer service (chatbots), content development, and even programming support.

Vision or Image Models

OpenAI's DALL-E demonstrates how foundation models can be applied to creative visual work. DALL-E is well known for its capacity to produce original images from written descriptions, including descriptions that blend unrelated concepts into a single picture. Because it is trained on both text and image data, the model is able to comprehend intricate descriptions and render them visually. Such a model has a wide range of applications, from visual education tools to graphic design and art production. DALL-E is a major advancement in computer vision use cases, demonstrating the ability of vision models to synthesize visual content.

Multimodal Models

A subset of foundation models known as multimodal models combines text, image, and audio input with other data sources to carry out intricate tasks. By utilizing the synergies between various kinds of data, these models offer a more thorough comprehension of complicated inputs. For example, textual descriptions can be used in conjunction with visual data in an object detection foundation model that leverages multimodal data to improve accuracy and contextual comprehension. Applications like virtual assistants, which can interpret both voice commands and visual inputs, and advanced analytics, where insights are derived from varied data sources, benefit greatly from the integration of multiple data types in AI models.

Conclusion

With the introduction of foundation models, artificial intelligence has entered a new era characterized by unprecedented versatility and transformational potential. As we've seen, these models offer a basic structure for building a wide range of artificial intelligence applications, such as natural language processing and computer vision. Their accuracy, efficiency, and size have allowed for major advances in a variety of industries, raising the bar for performance. However, as with any technology, advancement in this field must continue to be guided by ethical, transparent, and responsible use.
