
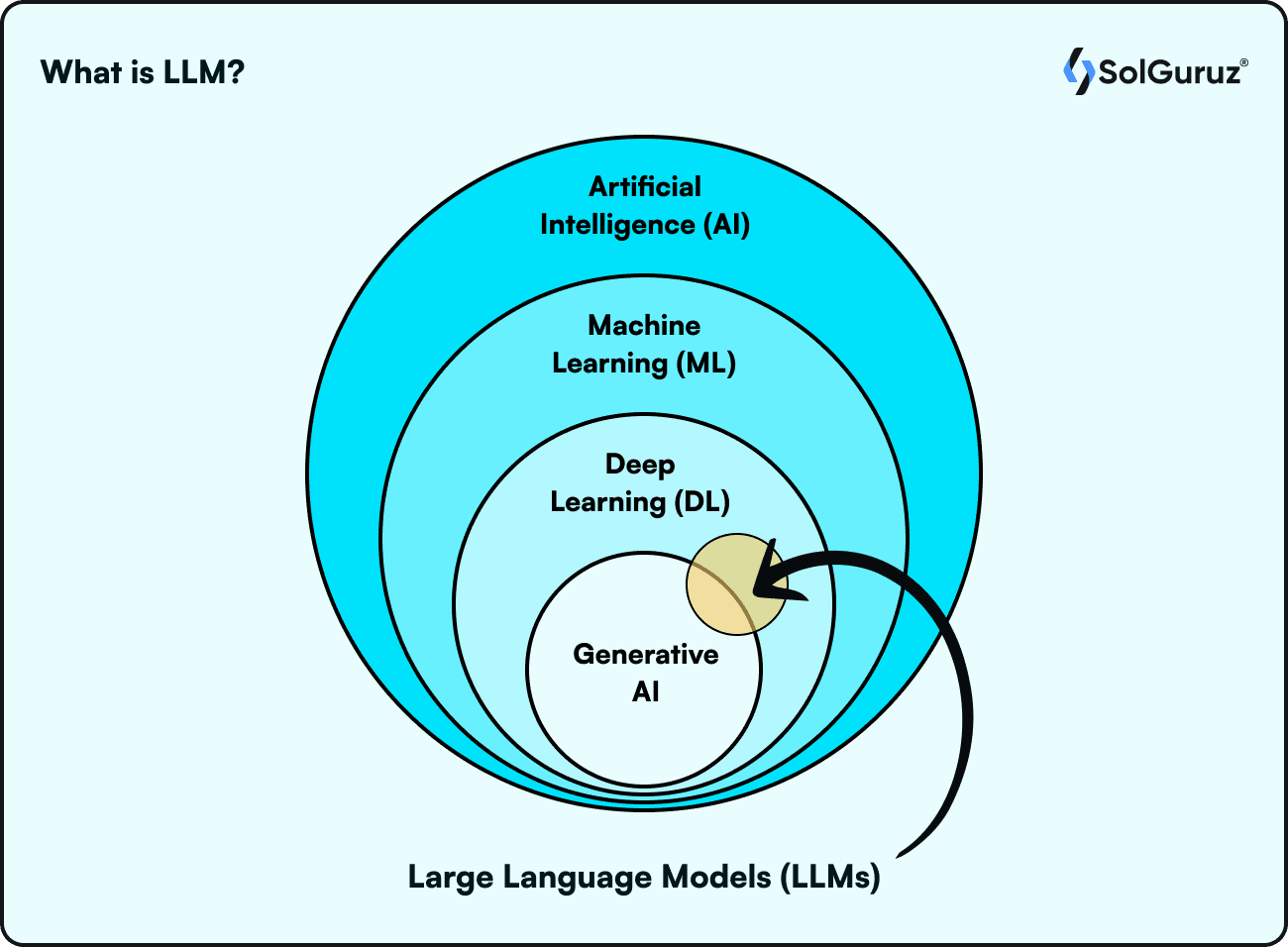

A large language model (LLM) is an artificial intelligence (AI) program that can recognize and produce text. It is built on deep learning, which is itself a subset of machine learning.

LLMs are neural networks with a very large number of parameters, trained on large datasets. The name breaks into three parts:

Large refers to the large datasets the model is trained on and its large number of parameters.

Language refers to the natural language used.

Model refers to the foundation model. LLMs are typically transformer models. The original transformer design pairs an encoder with a decoder, although many current LLMs use only the decoder. For more details, please refer to our Generative AI section.

Deep learning is a subset of machine learning that LLMs use to learn how words, phrases, and characters work together. Through the probabilistic analysis of unstructured data, deep learning eventually allows the model to distinguish between pieces of content without the need for human intervention.

After that, LLMs undergo additional training through tuning, which includes prompt-tuning or fine-tuning to the specific activity that the programmer wants them to perform, such as translating text across languages or interpreting questions and producing answers.

Generative AI produces original content such as images, audio, video, or text when given suitable prompts. LLMs are just one part of Gen AI, the part that deals with text. LLMs are trained to generate different kinds of text, such as poems, articles, essays, question-and-answer responses, summaries, and translations from one language to another.

Essentially, an LLM is: Data + Architecture + Training

To understand how LLMs work, we need to understand a few basics first.

An LLM's training procedure is largely responsible for its effectiveness. Training happens in two stages:

Pre-training: To enable the model to understand the relationships and patterns among words, it is exposed to massive volumes of text data from books, papers, web pages, and the internet in general. The more data it is trained on, the more refined its results tend to be, and it becomes more adept at producing original content as training progresses.

While doing this, it gains the ability to predict the next word in a sentence. Given the prompt "The sky is ___," for example, the model might predict "vast" or "blue." The more it is trained, the better its output fits the context. From billions of these predictions, the model picks up language, world facts, reasoning skills, and even certain biases. The biases in the results come from the data the model is fed.
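Next-word prediction can be illustrated with a deliberately tiny sketch. The code below is not how an LLM is implemented: it swaps the neural network for simple bigram counts, and the `toy_corpus` sentences are made up for illustration. It only shows the idea of turning observed text into probabilities for the word that follows.

```python
from collections import Counter, defaultdict

def train_bigram_counts(corpus):
    """Count, for every word, which words follow it in the corpus."""
    following = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.lower().split()
        for current, nxt in zip(words, words[1:]):
            following[current][nxt] += 1
    return following

def next_word_probabilities(following, word):
    """Turn the raw follow-up counts for `word` into probabilities."""
    counts = following.get(word.lower(), Counter())
    total = sum(counts.values())
    return {w: c / total for w, c in counts.items()} if total else {}

# Hypothetical training text, standing in for books and web pages.
toy_corpus = [
    "the sky is blue",
    "the sky is vast",
    "the sky is blue today",
    "the sea is blue",
]

counts = train_bigram_counts(toy_corpus)
probabilities = next_word_probabilities(counts, "is")

# Pick the most likely continuation of "The sky is ___".
prediction = max(probabilities, key=probabilities.get)
print(probabilities, prediction)
```

Because "blue" follows "is" more often than "vast" in this toy data, it gets the higher probability. The same mechanism explains bias: the predictions can only reflect whatever the training text contains.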

Fine-tuning: After the initial pre-training, the model can be made to specialize in specific categories or knowledge domains by refining it on a smaller dataset. This helps align the model's outputs with the intended results. For instance, a model fine-tuned on healthcare data is more likely to produce relevant results for healthcare questions than a model that has not been fine-tuned.

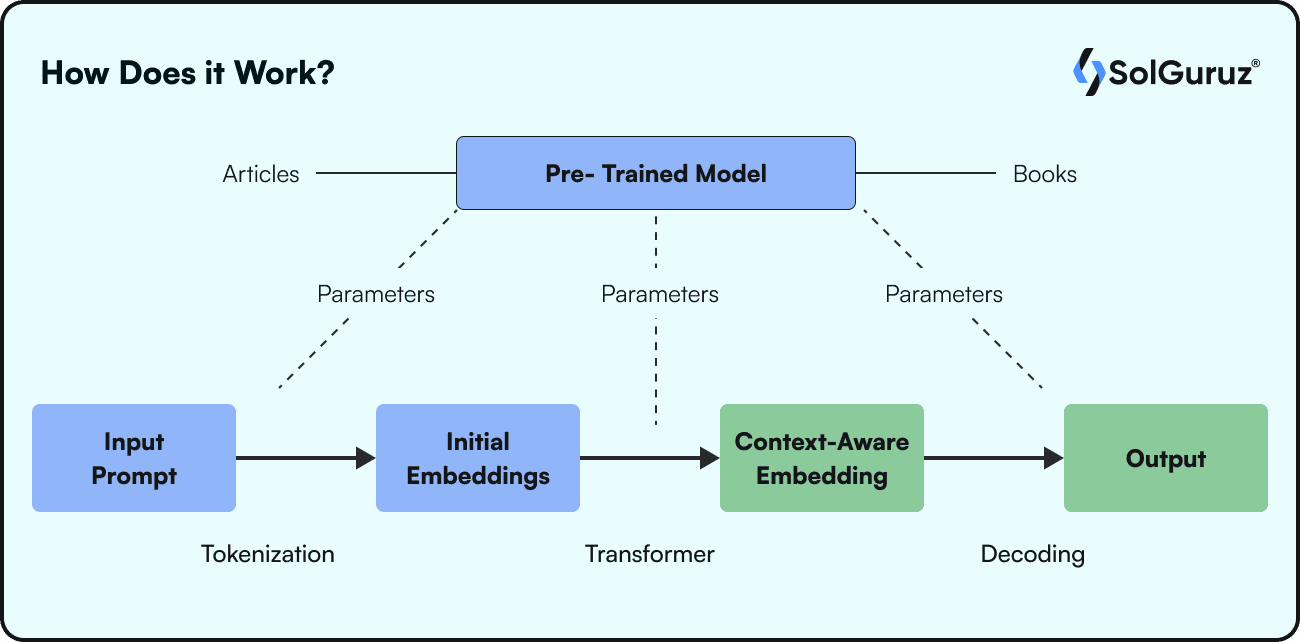

Once a question or instruction (the input prompt) is given to the LLM, its words are broken into tokens. Tokenization is the process of splitting text into tokens and converting them into numerical values, based on a vocabulary fixed during training. After tokenization, the input prompt is fed to the initial embeddings. Depending on the architecture, the output is then fed to the context-aware embeddings. In this case, the architecture is a "Transformer model," meaning it takes an input, puts it through the encoder, then the decoder, and the result is generated.

The context-aware embeddings are in the form of numerical values. These numbers are then decoded back into natural language to give human-understandable output.
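The path from text to numbers and back can be sketched in a few lines. This is a simplified illustration under stated assumptions: the six-word `vocabulary`, the whitespace tokenizer, and the random `embedding_table` are all hypothetical. Real LLMs typically use subword tokenizers and embeddings learned during training, and the context-aware (transformer) step is omitted here.

```python
import random

# Hypothetical vocabulary; index 0 is reserved for unknown words.
vocabulary = ["<unk>", "the", "sky", "is", "blue", "vast"]
token_to_id = {token: i for i, token in enumerate(vocabulary)}

def tokenize(text):
    """Split on whitespace and map each word to its numerical id."""
    return [token_to_id.get(word, 0) for word in text.lower().split()]

def detokenize(ids):
    """Map numerical ids back to human-readable text."""
    return " ".join(vocabulary[i] for i in ids)

# Initial embeddings: one vector per vocabulary entry.
# Random values stand in for weights a real model would learn.
random.seed(0)
EMBEDDING_DIM = 4
embedding_table = [
    [random.uniform(-1, 1) for _ in range(EMBEDDING_DIM)]
    for _ in vocabulary
]

def embed(ids):
    """Look up the embedding vector for each token id."""
    return [embedding_table[i] for i in ids]

token_ids = tokenize("The sky is blue")
vectors = embed(token_ids)
round_trip = detokenize(token_ids)
print(token_ids, round_trip)
```

Each token becomes an id, each id selects a vector, and ids can be mapped back to words at the end. In a real model, the vectors would pass through the transformer layers before the output ids are decoded.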

Throughout this process, the parameters learned during pre-training are applied at every stage to process and refine the input.

Large Language Models have great flexibility. One model is capable of carrying out a wide range of functions, including answering inquiries, summarizing papers, translating across languages, and finishing sentences. LLMs have the power to change how consumers use virtual assistants and search engines, as well as how information is created.

Even though they're not flawless, LLMs are showing a remarkable capacity for prediction based on a comparatively small set of cues or inputs. Using human language prompts as input, generative AI (artificial intelligence) can generate content using LLMs.

Based on how the LLMs are trained, they are classified into four categories.

Zero-shot models: Standard LLMs trained on generic data to yield reasonably accurate results for typical use cases are known as zero-shot models. These models can be used right away and don't require any further training.

Fine-tuned models: These models improve upon the efficacy of the initial zero-shot model by undergoing extra training. One example is OpenAI Codex, a model based on GPT-3 that is used as an auto-completion programming tool. These are also called specialized LLMs.

Language representation models: These models make use of deep learning methods and transformers, the architectural cornerstone of generative AI. They enable language to be converted into different mediums, such as written text, and are well suited to natural language processing problems.

Multimodal models: These LLMs can process both text and images, which sets them apart from their predecessors, which were mainly made for text generation. One example is GPT-4V, a more recent multimodal version of GPT-4 that can interpret and produce content in several modalities.

Working with LLMs brings several challenges:

Making sure the content they provide is correct and dependable is one of the main challenges, because LLMs are capable of producing writing that is factually incorrect or deceptive.

User-facing apps built on top of LLMs are as vulnerable to security flaws as any other program. Malicious inputs can also be used to manipulate LLMs into favoring certain responses over others, including responses that are dangerous or morally dubious. Finally, users may upload private or confidential material into LLMs to boost their own productivity. However, LLMs are not meant to be secure vaults: if the inputs they receive are used to further train the model, they may reveal that private information in response to inquiries from other users.

The accuracy of the information provided by LLMs is only as good as the data they consume. If they are given erroneous information, they will respond to user inquiries with misleading information. LLMs can also "hallucinate" occasionally, fabricating facts when they are unable to provide a precise response. For instance, in 2022 the news outlet Fast Company asked ChatGPT about Tesla's most recent financial quarter. Although ChatGPT responded with a coherent news piece, a large portion of the information was made up.

LLMs have immense potential and offer multiple advantages.

LLMs are brilliant with words. They can also produce writing that is stylistically akin to that of a specific author or genre. If you train an LLM on Shakespeare and then prompt it, it can generate a Shakespeare-style poem to delight you.

LLMs are trained on text in many different languages, which makes them helpful for language translation.

One method of addressing the accuracy shortcomings of LLMs is to connect a conversational AI system to a trustworthy data source, such as a business website. This lets a virtual agent use the generative properties of a large language model to produce a variety of helpful content, such as training data and replies that are consistent with the brand identity.
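Grounding a model in a trusted source can be sketched as "retrieve first, then prompt." The example below is a minimal illustration, not a production retrieval system: the `company_pages` text is invented, the word-overlap `score` function is a stand-in for a real search or embedding index, and the resulting prompt would still need to be sent to an actual LLM, which is left out here.

```python
def score(question, passage):
    """Count how many distinct question words also appear in the passage."""
    question_words = set(question.lower().split())
    passage_words = set(passage.lower().split())
    return len(question_words & passage_words)

def retrieve(question, passages, top_k=1):
    """Return the top_k passages that share the most words with the question."""
    ranked = sorted(passages, key=lambda p: score(question, p), reverse=True)
    return ranked[:top_k]

def build_grounded_prompt(question, passages):
    """Combine the retrieved context and the question into one prompt."""
    context = "\n".join(f"- {p}" for p in retrieve(question, passages))
    return (
        "Answer using only the context below. "
        "If the answer is not in the context, say you do not know.\n"
        f"Context:\n{context}\n"
        f"Question: {question}"
    )

# Hypothetical snippets standing in for pages of a business website.
company_pages = [
    "Support is available Monday to Friday from 9am to 5pm",
    "Refunds are issued within 14 days of purchase",
    "Our office is located in Springfield",
]

prompt = build_grounded_prompt("when are refunds issued", company_pages)
print(prompt)
```

The design choice is to restrict the model to supplied context and give it an explicit way to decline, which reduces the room for fabricated answers.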

AI systems that use natural language processing are better able to comprehend written and spoken language, much as humans do. Prior to LLMs, businesses trained machines to comprehend human text using a variety of machine-learning algorithms. The introduction of LLMs such as GPT-3.5 has simplified the procedure and improved the speed and accuracy with which AI-powered systems comprehend human text. Bard and ChatGPT are well-known examples.

Because LLMs comprehend human language well, they are well suited to labor-intensive or repetitive jobs. Professionals and organizations can reduce human effort by using LLMs to automate data processing and repetitive tasks. This ability to boost productivity through automation is one of the reasons LLMs are so valuable to businesses.

LLMs have different applications in various fields.

Text generation: LLMs can create language in response to prompts, producing emails, blog posts, or other mid-to-long form content that can then be polished and refined. Retrieval-augmented generation (RAG) is a prime example.

Content summarization: LLMs can condense lengthy articles, news items, research studies, company records, and even client histories into summaries with lengths appropriate for the output format.

Code generation: LLMs help developers create programs, detect bugs, and locate security flaws in code written in different programming languages, and can even "translate" between those languages.

Sentiment analysis: LLMs can examine text to ascertain the customer's tone, which helps businesses understand customer feedback at scale and supports brand reputation management.

The advent of large language models capable of producing text and answering inquiries, such as ChatGPT, Claude 2, and Llama 2, presents intriguing future developments. Their ability to generate human-like text will be refined with time and training.
