Retrieval Augmented Generation (RAG) combines a traditional information retrieval system with a generative large language model (LLM). The retrieval side contributes access to an external knowledge base, and the LLM contributes fluent text generation. With that extra knowledge available at answer time, a RAG system can produce more accurate, up-to-date, and relevant results.

Why RAG

RAG comes in where LLMs fall short: supplying context. Large Language Models (LLMs) have taken text generation to another level, and related generative models have done the same for images, audio, and video. However, an LLM on its own cannot draw on context beyond its training data, which makes it less useful in real-world applications. RAG seeks to bridge the gap with a system that is better at addressing user intent and providing insightful, context-aware responses.

RAG doesn't require retraining the model. Instead, domain-specific knowledge is supplied from an external knowledge base at query time. This makes it more cost-, time-, and resource-effective.

Another important challenge with traditional LLMs is hallucination.

The LLM may generate outputs that are outdated if the training data is fixed or updated infrequently. Additionally, the training data might not contain information pertinent to a user's prompt, which could cause the LLM to produce entirely or partially erroneous output i.e. hallucination.

RAG helps reduce hallucination and provide precise answers on the relevant topics.

How RAG Works

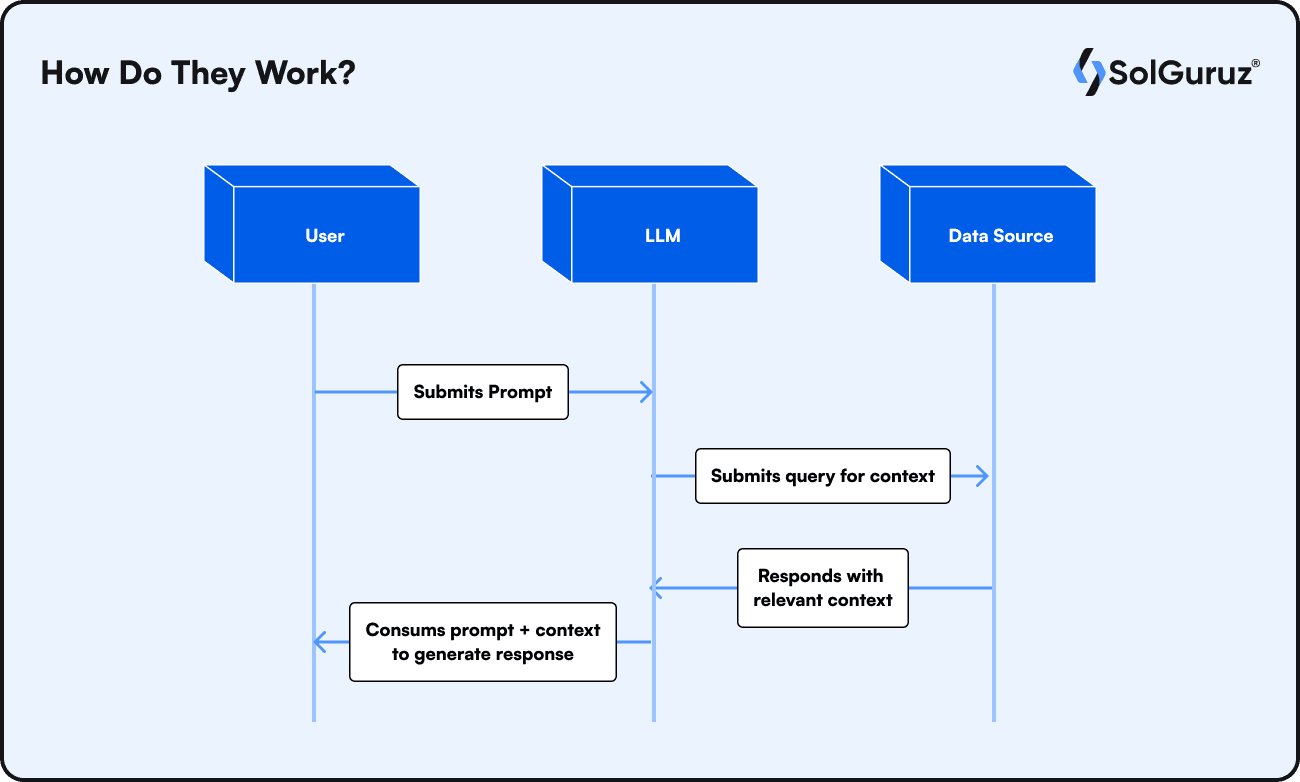

A RAG model works by giving the LLM the relevant context for the user input.

The figure above shows a simple RAG workflow.

The user prompt is passed to the retriever, which searches the data source for relevant material and hands it to the LLM along with the prompt. The data source, or knowledge base, is pre-loaded with data. It can be a database, a data management system, or any collection of documents such as policies and guides.

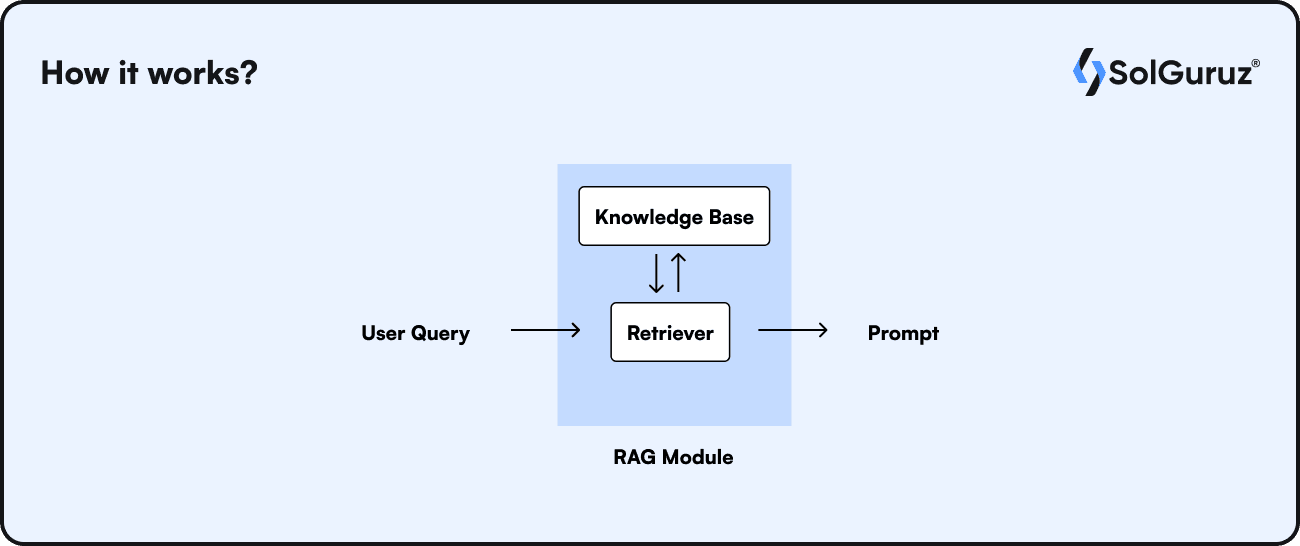

The two key elements of any RAG model are:

- Retriever

- Knowledge base: holds the information, such as sources, papers, or policies

Given an input and a source (such as Wikipedia), RAG retrieves a set of pertinent and supporting documents. The text generator produces the final result when the documents are fed in as context along with the original input prompt.
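The retrieval step described above can be sketched in a few lines. This is a minimal illustration, not a production retriever: it ranks documents by simple word overlap, whereas real systems typically use embeddings or a search index. The `KNOWLEDGE_BASE` contents and the function names `tokenize`, `score`, and `retrieve` are hypothetical and chosen only for this example.

```python
import re
from collections import Counter

# Hypothetical in-memory knowledge base; a real one might be a database
# or a document store holding policies, guides, and similar material.
KNOWLEDGE_BASE = [
    "Employees may carry over up to five unused vacation days per year.",
    "Expense reports must be submitted within 30 days of purchase.",
    "Remote work requires written approval from a team lead.",
]


def tokenize(text):
    """Lowercase the text and split it into alphanumeric word tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def score(query, document):
    """Count the query tokens that also occur in the document."""
    query_counts = Counter(tokenize(query))
    doc_counts = Counter(tokenize(document))
    return sum(min(count, doc_counts[token]) for token, count in query_counts.items())


def retrieve(query, documents, top_k=2):
    """Return up to top_k documents with a non-zero overlap score, best first."""
    ranked = sorted(documents, key=lambda doc: score(query, doc), reverse=True)
    return [doc for doc in ranked if score(query, doc) > 0][:top_k]
```

Calling `retrieve("How many vacation days can I carry over?", KNOWLEDGE_BASE, top_k=1)` would return the vacation policy sentence, which could then be passed to the text generator as context alongside the original question.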

So a typical prompt reads like this – “Give me information on ….”

On its own, the LLM answers from whatever it learned from its training data. The generated information may or may not be relevant, and it falls to a human to pick out what is meaningful.

With RAG, the pertinent data obtained in the retrieval stage is combined with the user's question into a single prompt, which is then posed to the LLM. That prompt could read something like this:

The query posed by the user may be like this:

<insert query here>.

Please use the details below to answer the above question:

The prompt might alternatively read, "Please answer the question using the information below and nothing else you know." This is because the general purpose of RAG is to limit the LLM's response to the knowledge included in the pertinent material.
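As a rough sketch of how such a prompt might be assembled in code, the function below joins the user query, one of the two instruction sentences quoted above, and the retrieved passages. The name `build_prompt`, the `strict` flag, and the bullet-style layout of the passages are assumptions made for illustration; the article does not prescribe a format.

```python
def build_prompt(query, documents, strict=True):
    """Combine the user query with retrieved passages into one prompt string.

    strict=True uses the wording that limits the LLM to the supplied material;
    strict=False uses the softer instruction.
    """
    if strict:
        instruction = (
            "Please answer the question using the information below "
            "and nothing else you know."
        )
    else:
        instruction = "Please use the details below to answer the above question:"

    # Each retrieved passage becomes one line of context.
    context = "\n".join(f"- {doc}" for doc in documents)
    return f"{query}\n\n{instruction}\n\n{context}"
```

The resulting string is what would be sent to the LLM. Keeping the instruction separate from the context makes it easy to switch between the strict and the softer wording.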

Benefits of Implementing RAG

RAG addresses many of the issues associated with large language models used on their own, and it offers a number of advantages to businesses wishing to use generative AI models. Among them are:

1. Fewer hallucinations

By grounding answers in a dedicated knowledge base, RAG aims to reduce hallucinations and, with them, erroneous outputs and misinformation.

2. Delivering correct and up-to-date responses

RAG makes sure that an LLM's response isn't based only on old, outdated training data. The response reflects current information and stays in context with the user query. Because the retrieved sources are known, outputs can be backed by citations, which makes the answers easier to verify.

3. Being economical and efficient

Retraining or fine-tuning an LLM can cost far more than expected, since it means updating a large neural network. RAG is comparatively more economical and easier to use.

4. Dynamic updating of information

With RAG, retraining a model on fresh data and updating its parameters is no longer necessary to keep answers current. Instead, the external source is updated, and the retrieval system searches it to give the LLM up-to-date, pertinent information for its replies.
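To make the idea of updating the external source concrete, here is a small sketch in which changing one stored document immediately changes what the retriever returns, with no model involved at all. The `KnowledgeBase` class, its `upsert` and `search` methods, and the substring matching are all hypothetical simplifications for illustration.

```python
class KnowledgeBase:
    """Toy document store keyed by document ID (illustrative only)."""

    def __init__(self):
        self._docs = {}

    def upsert(self, doc_id, text):
        """Insert a new document or overwrite the existing one with this ID."""
        self._docs[doc_id] = text

    def search(self, keyword):
        """Return every stored text containing the keyword, ignoring case."""
        needle = keyword.lower()
        return [text for text in self._docs.values() if needle in text.lower()]


kb = KnowledgeBase()
kb.upsert("pricing", "The basic plan costs $10 per month.")
before = kb.search("basic plan")

# The source changes: only the stored document is replaced.
kb.upsert("pricing", "The basic plan costs $12 per month.")
after = kb.search("basic plan")
```

Here `before` holds the old price and `after` holds the new one. Any LLM that receives `after` as context would see the updated figure without having been retrained.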

The Need for RAG in Enterprises

Retrieval systems have become more popular as a result of the desire to go beyond LLM constraints and use them in real-world situations where timeliness and accuracy are crucial. When using pre-trained language models, we encounter the following problems:

- Difficulty extending the model's knowledge

- Outdated data

- Absence of references

- Hallucinations

- Risk of sensitive, private information being leaked

RAG makes an effort to deal with these difficulties while utilizing language models. Next, let's examine how RAG carries this out.

Why Use RAG When You Can Fine-Tune?

RAG and fine-tuning have both become important ways of extending an LLM's knowledge and skills past its initial training set. Both aim to enrich a pre-trained language model with domain-specific knowledge; however, they differ in how they incorporate and use new information.

An organization's decision on whether to use RAG or fine-tune its model will depend on its goals and objectives.

Fine-tuning works best when the primary objective is to enable the language model to carry out a particular task, such as analyzing client sentiment on particular social media platforms. In such situations, success can be achieved without much outside knowledge or integration with an external knowledge store.

Fine-tuning, however, depends on static data, which is problematic for use cases involving data sources that require frequent updates or modifications. From the perspective of cost, time, and resources, it is not practical to retrain an LLM, such as the models behind ChatGPT, on fresh data with every update.

For knowledge-intensive tasks that benefit from current information obtained from outside sources, such as real-time market data or customer behavior data, RAG is the preferable option. These tasks could involve answering vague, open-ended questions or combining intricate, in-depth data to create summaries.

Use Cases of RAG

RAG can be applied in many areas, including:

1) Chatbots

AI-powered chatbots are widely adopted across industries. RAG-powered chatbots and AI assistants can draw on an extensive knowledge base when answering users.

2) Healthcare

Healthcare is benefitting widely from AI. RAG models are proving to be a valuable tool for doctors and other healthcare professionals.

3) Language Translation

Language translation is another domain in which the RAG model comes in handy. Supplying relevant context to the model can result in more accurate translations and more meaningful sentence structures.

4) Legal Research

Legal practitioners can perform effective legal research and speed up their document review procedures by implementing RAG models. RAG helps improve accuracy and save time when summarizing case law and other legal writings.

Final Thoughts

Large Language Models offer businesses fascinating new possibilities, and RAG has potential as a crucial strategy for enhancing NLP-based human-computer interactions. It can offer varied applications and benefits when used in settings that can tolerate its current limitations.
