Next in the series is “Prompt Engineering”. This article covers what you need to know to understand prompts and prompt engineering.

What is Prompt Engineering?

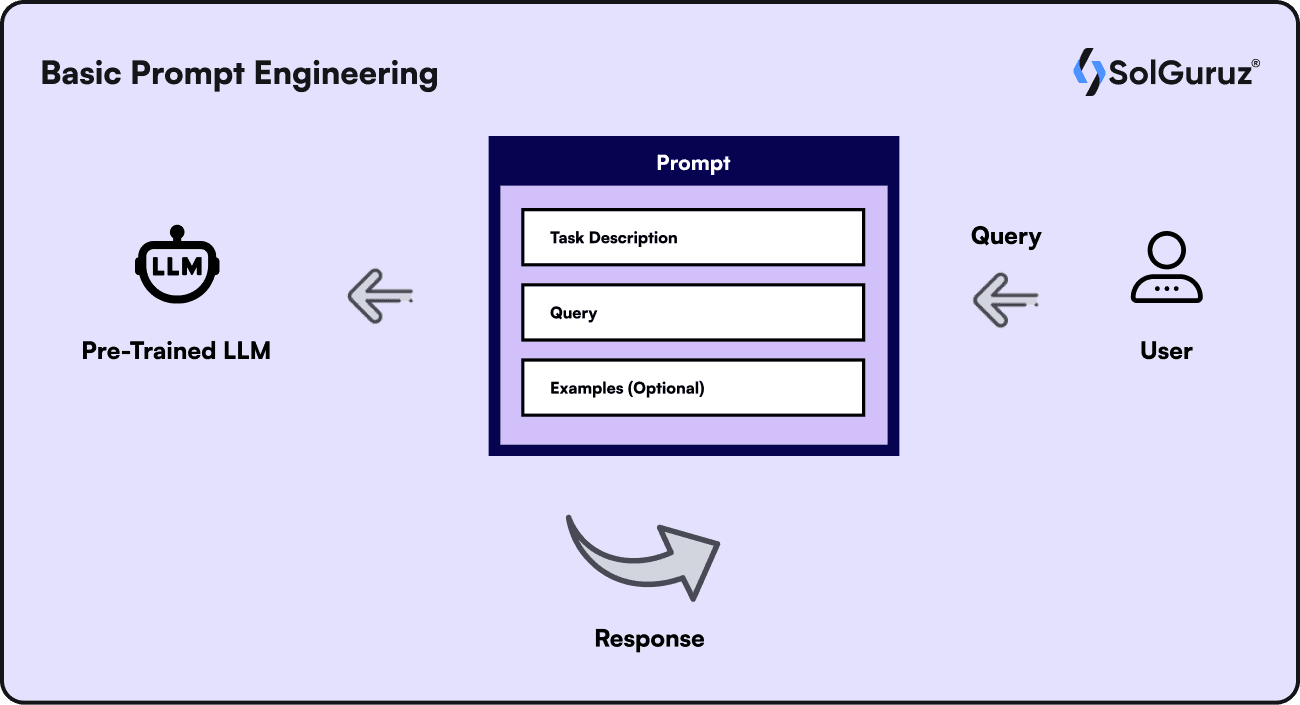

To complete a task, you can communicate with large language models (LLMs) through prompts. A prompt is the input from the user that the model responds to. Depending on how the model is meant to be used, prompts can be questions, instructions, or any other kind of input. Prompt engineering is the practice of constructing appropriate prompts to communicate with LLMs.

It involves creating prompts, sometimes simple and sometimes complex, to get the desired result from a model. In ChatGPT, a prompt may be any instruction to generate content. In Stable Diffusion, a text prompt is used to generate an image.

In simple terms, prompt engineering is the language we use to communicate with large language models (LLMs), and the instructions we give them are called prompts. In practice, it mostly means devising the right questions to get a correct response from the LLM and avoid hallucinations (false responses). We’ll cover hallucinations in later posts.

How Do Prompts Work?

LLMs learn from their training data, so their output depends heavily on what they were trained on. LLMs are also prone to hallucinations, which are simply false responses or wrong answers. To get correct responses and avoid hallucinations, you need to either train the model on more dedicated data or give it proper instructions. If you want domain-specific results, domain-specific training is typically required.

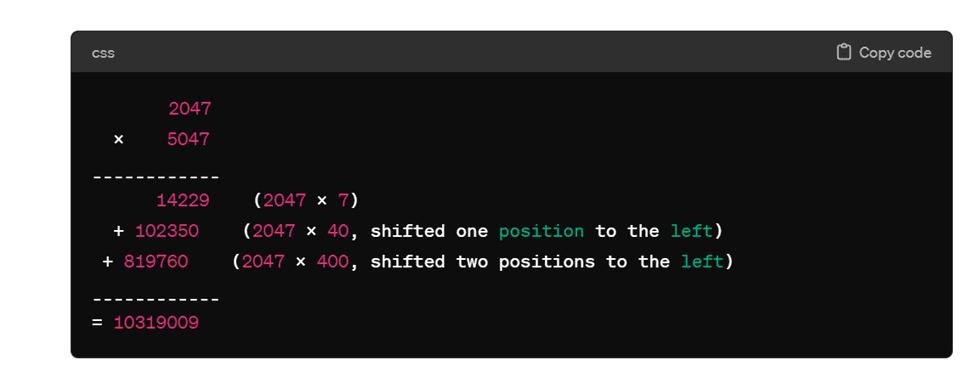

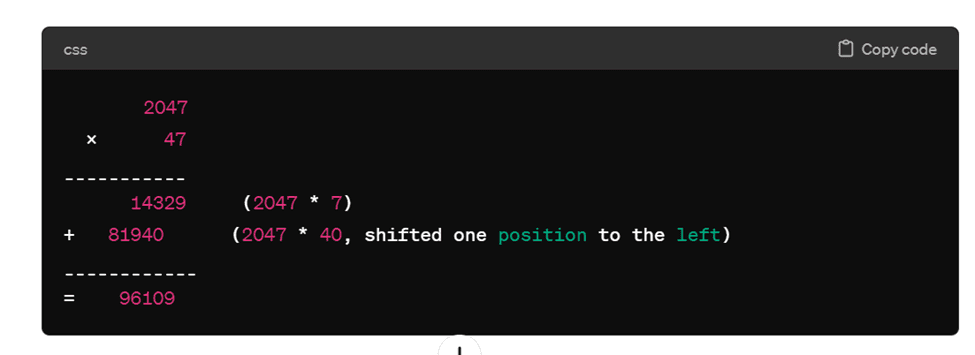

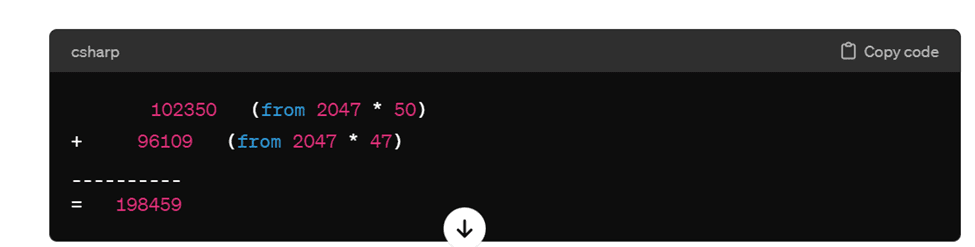

When devising prompts, remember that LLMs are models trained on data. So, if you want a correct answer, phrase your question or instruction in a detailed, step-by-step manner. For instance, to get the product of 2047 and 5047, you might enter the prompt “What is the 2047*5047?”

The LLM generates the wrong answer.

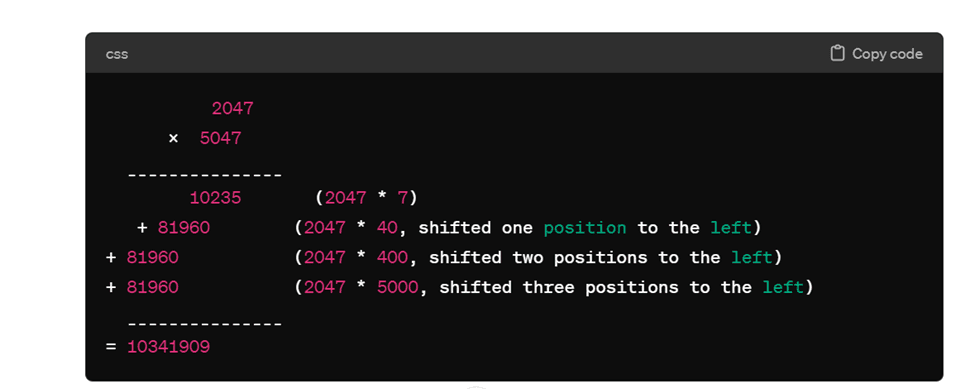

Next step – “Incorrect answer. Check Again. Give me the result step by step”

Again, the answer is wrong.

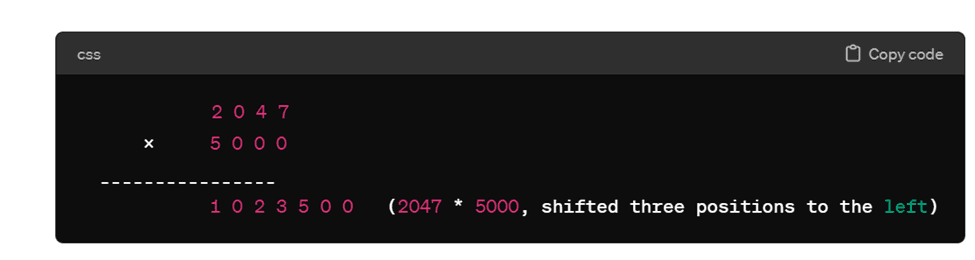

Next Prompt – “What is 2047*5000?”

Next Prompt – “What is 2047*47?”

Next Prompt – “Add the two results”

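In the final addition, the model used a wrong value for one of the partial results, so the answer was still wrong. The next prompt therefore supplied both partial results explicitly.

Next Prompt – “Add 10,235,000 + 96,209”

This time the answer is correct: 2047 × 5047 = 10,235,000 + 96,209 = 10,331,209.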

This example shows how prompts work, where they can go wrong, where human judgment is needed, and how well-designed prompts lead to the desired result.
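The step-by-step decomposition used in the prompts above can be double-checked with a few lines of Python. This only verifies the arithmetic; it says nothing about how an LLM computes internally.

```python
# Split 5047 into 5000 + 47 and multiply each part separately,
# mirroring the step-by-step prompts in the example.
a = 2047
part_1 = a * 5000  # 2047 * 5000
part_2 = a * 47    # 2047 * 47
total = part_1 + part_2

print(f"{part_1:,} + {part_2:,} = {total:,}")  # 10,235,000 + 96,209 = 10,331,209

# Cross-check against the direct product.
assert total == a * 5047
```

Breaking the multiplication into two easy partial products is exactly what the follow-up prompts asked the model to do.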

There are two types of prompts:

Hard Prompts

Hard prompts are written manually by humans. They act as templates that can be stored, reused, shared, and reprogrammed.
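As a rough illustration of the "template" idea, here is a hard prompt stored with placeholders using Python's standard `string.Template`. The template wording, the field names, and the `render` helper are hypothetical, chosen only to show a reusable, shareable prompt.

```python
from string import Template

# A hard prompt: human-written text with placeholders, stored for reuse.
# The wording and field names are illustrative assumptions.
SUMMARY_PROMPT = Template(
    "Summarize the following $doc_type in $n_sentences sentences "
    "for a $audience audience:\n\n$text"
)


def render(template: Template, **fields: str) -> str:
    """Fill a stored hard-prompt template; raises KeyError if a field is missing."""
    return template.substitute(**fields)


prompt = render(
    SUMMARY_PROMPT,
    doc_type="blog post",
    n_sentences="3",
    audience="non-technical",
    text="Prompts are the inputs users give to large language models.",
)
print(prompt)
```

Because the template is plain text, anyone can read it, edit the wording, or share it, which is what distinguishes hard prompts from soft prompts.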

Soft Prompts

Soft prompts came after hard prompts and are generated by AI rather than written by hand. They are created during prompt tuning. Unlike hard prompts, soft prompts cannot be viewed or edited as text: a soft prompt is an embedding, a sequence of numbers that draws knowledge out of the larger model. Soft prompts can reduce the need for additional training data.
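To make the contrast concrete, here is a toy sketch of what a soft prompt looks like from the model's side: a few vectors of numbers (one per "virtual token") placed in front of the embeddings of the real text tokens. The tiny vocabulary, the 4-dimensional embeddings, and the random values are assumptions for illustration; in actual prompt tuning, the soft-prompt vectors are learned by training while the model's own weights stay frozen.

```python
import random

EMBED_DIM = 4  # toy embedding size; real models use far larger vectors

random.seed(0)

# Frozen embedding table of a hypothetical tiny model: each word maps to a vector.
vocab = ["translate", "to", "french", "hello"]
embedding_table = {
    word: [random.uniform(-1, 1) for _ in range(EMBED_DIM)] for word in vocab
}

# Soft prompt: 2 "virtual tokens" that are just trainable vectors.
# They do not correspond to any word, so they cannot be read or edited as text.
NUM_VIRTUAL_TOKENS = 2
soft_prompt = [
    [random.uniform(-1, 1) for _ in range(EMBED_DIM)] for _ in range(NUM_VIRTUAL_TOKENS)
]


def build_model_input(words: list[str]) -> list[list[float]]:
    """Place the soft-prompt vectors in front of the embeddings of the text tokens."""
    return soft_prompt + [embedding_table[w] for w in words]


model_input = build_model_input(["translate", "to", "french", "hello"])
print(len(model_input), "vectors of size", len(model_input[0]))  # 6 vectors of size 4
```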

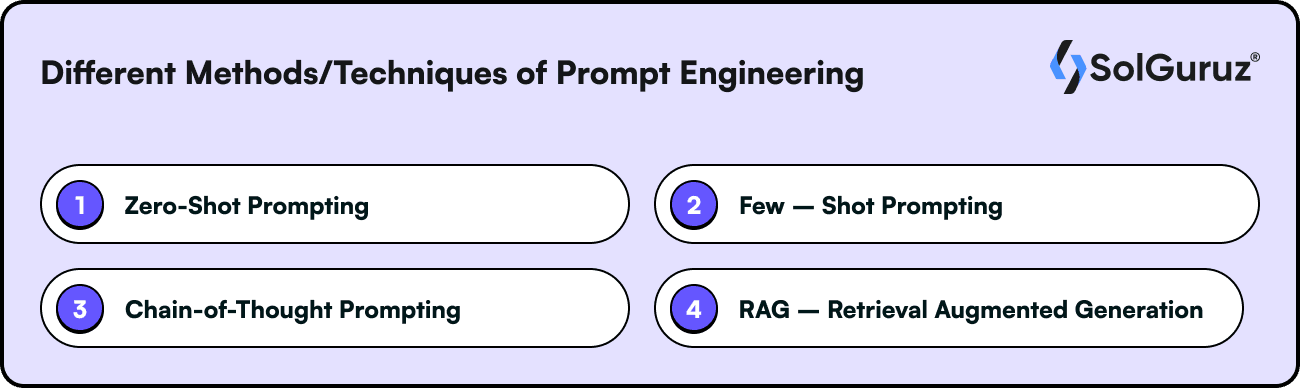

Different Methods/Techniques of Prompt Engineering

These techniques help the model understand the task better and produce more refined output.

Zero-Shot Prompting

In zero-shot prompting, the model is given a task it hasn't been specifically trained on, without any examples in the prompt. It tests the model's ability to produce relevant output without relying on prior examples.
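A zero-shot prompt, sketched as a small Python helper: the instruction and the input are all the model gets. The helper name and the sentiment task are hypothetical, and the call to an actual LLM is left out because it depends on the provider's API.

```python
def zero_shot_prompt(task: str, text: str) -> str:
    """Build a zero-shot prompt: an instruction plus the input, with no examples."""
    return f"{task}\n\nText: {text}\nAnswer:"


prompt = zero_shot_prompt(
    "Classify the sentiment of the text as positive, negative, or neutral.",
    "The delivery was late, but the support team fixed it quickly.",
)
print(prompt)
```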

Few-Shot Prompting

In few-shot prompting, the model is given a few examples (shots) to help it learn what the requester wants it to do. With this context to work with, the model can better understand the intended output.
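The same hypothetical sentiment task as a few-shot prompt: a couple of labeled examples (the shots) come before the new input, so the model can infer the expected format and labels. The helper name and the examples are illustrative assumptions.

```python
def few_shot_prompt(task: str, examples: list[tuple[str, str]], text: str) -> str:
    """Build a few-shot prompt: instruction, worked examples (shots), then the new input."""
    lines = [task, ""]
    for example_input, example_output in examples:
        lines.append(f"Text: {example_input}\nAnswer: {example_output}\n")
    lines.append(f"Text: {text}\nAnswer:")
    return "\n".join(lines)


shots = [
    ("I love this phone, the battery lasts all day.", "positive"),
    ("The screen cracked after one week.", "negative"),
]
prompt = few_shot_prompt(
    "Classify the sentiment of the text as positive, negative, or neutral.",
    shots,
    "The delivery was late, but the support team fixed it quickly.",
)
print(prompt)
```

The only difference from the zero-shot version is the block of examples; the model is left to continue the pattern after the final "Answer:".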

Chain-of-Thought Prompting

Chain-of-thought (CoT) prompting is a more sophisticated method that gives the model a detailed line of reasoning to follow. A complex task is broken into intermediate steps, or "chains of reasoning," which helps the model produce more accurate outputs.
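One common way to apply chain-of-thought prompting is to include a worked example whose answer spells out its intermediate steps, then ask the model to reason the same way. The sketch below reuses the multiplication from earlier in this article as that example and ends with the widely used cue "Let's think step by step." The helper and the second question are hypothetical.

```python
# A worked example whose answer spells out each intermediate step.
COT_EXAMPLE = (
    "Q: What is 2047 * 5047?\n"
    "A: Split 5047 into 5000 + 47.\n"
    "2047 * 5000 = 10,235,000.\n"
    "2047 * 47 = 96,209.\n"
    "10,235,000 + 96,209 = 10,331,209.\n"
    "The answer is 10,331,209.\n"
)


def chain_of_thought_prompt(question: str) -> str:
    """Prepend a step-by-step exemplar and ask the model to reason the same way."""
    return f"{COT_EXAMPLE}\nQ: {question}\nA: Let's think step by step."


prompt = chain_of_thought_prompt("What is 3021 * 4012?")
print(prompt)
```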

RAG – Retrieval Augmented Generation

Retrieval Augmented Generation (RAG) is a powerful technique that improves the relevance and accuracy of LLM responses by combining prompt engineering with retrieval of context from external data sources. Grounding the model in this additional data enables more precise, context-aware responses.
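A minimal sketch of the RAG flow, assuming a tiny in-memory document list: retrieve the most relevant text, then place it in the prompt as context before the question. Simple word overlap stands in here for a real retriever (production systems typically use a search index or embedding-based vector search), and the documents and helper names are hypothetical.

```python
import re

# Hypothetical in-memory knowledge base standing in for an external data source.
DOCUMENTS = [
    "Our support desk is open Monday to Friday, 9 am to 6 pm.",
    "Refunds are processed within 7 business days of approval.",
    "The mobile app supports Android 10 and later.",
]


def tokenize(text: str) -> set[str]:
    """Lowercase the text and split it into a set of words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query: str, docs: list[str], k: int = 1) -> list[str]:
    """Rank documents by word overlap with the query and return the top k."""
    q = tokenize(query)
    return sorted(docs, key=lambda d: len(q & tokenize(d)), reverse=True)[:k]


def rag_prompt(query: str, docs: list[str]) -> str:
    """Ground the prompt in retrieved context before asking the question."""
    context = "\n".join(f"- {d}" for d in retrieve(query, docs))
    return (
        "Answer the question using only the context below. "
        "If the answer is not in the context, say you don't know.\n\n"
        f"Context:\n{context}\n\nQuestion: {query}\nAnswer:"
    )


print(rag_prompt("How long do refunds take?", DOCUMENTS))
```

The instruction to answer only from the context is what grounds the model in the retrieved data instead of its training data alone.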

Prompt Tuning

Since we are learning about prompts in depth, another concept in prompt engineering worth covering is prompt tuning.

Prompt tuning, along with its variant P-tuning, is a parameter-efficient tuning method. In P-tuning, a small trainable model is placed in front of the LLM: this small model encodes the text prompt and generates task-specific virtual tokens.

These virtual tokens are prepended to the prompt and passed to the LLM. Once tuning is complete, the small model is no longer needed: the virtual tokens it produced are stored in a lookup table and used directly during inference.
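The flow described above can be sketched in plain Python, under heavy simplifying assumptions: the "tiny model" is a single random linear layer (real P-tuning encoders are small neural networks whose weights are trained), training is omitted entirely, and the task name and sizes are hypothetical. What the sketch does show is the structure: the encoder produces virtual tokens once, they are cached in a lookup table, and at inference time they are simply prepended to the prompt's embeddings.

```python
import random

EMBED_DIM = 4           # toy size of the LLM's token embeddings
NUM_VIRTUAL_TOKENS = 3  # number of virtual tokens for the task (assumed)

random.seed(1)


class TinyPromptEncoder:
    """Hypothetical stand-in for the small trainable model used during P-tuning.

    Maps learnable seed vectors to virtual-token embeddings with one linear layer.
    Training is omitted: the weights below are random placeholders.
    """

    def __init__(self, dim: int, num_tokens: int) -> None:
        self.seeds = [[random.gauss(0, 1) for _ in range(dim)] for _ in range(num_tokens)]
        self.weights = [[random.gauss(0, 0.1) for _ in range(dim)] for _ in range(dim)]

    def virtual_tokens(self) -> list[list[float]]:
        # Matrix-vector product per seed: weights @ seed -> one virtual-token embedding.
        return [
            [sum(w * s for w, s in zip(row, seed)) for row in self.weights]
            for seed in self.seeds
        ]


# After tuning: run the encoder once and cache its output in a lookup table.
encoder = TinyPromptEncoder(EMBED_DIM, NUM_VIRTUAL_TOKENS)
VIRTUAL_TOKEN_TABLE = {"sentiment": encoder.virtual_tokens()}
del encoder  # the small model is not needed at inference time


def prepare_input(task: str, prompt_embeddings: list[list[float]]) -> list[list[float]]:
    """Prepend the cached virtual tokens for `task` to the prompt's embeddings."""
    return VIRTUAL_TOKEN_TABLE[task] + prompt_embeddings


prompt_embeddings = [[0.0] * EMBED_DIM for _ in range(5)]  # stand-in for 5 real tokens
model_input = prepare_input("sentiment", prompt_embeddings)
print(len(model_input))  # 8: 3 virtual tokens + 5 real tokens
```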

Fine-tuning has produced excellent results with many models and is a very effective technique. However, it requires significant resources, such as time and money, which are not always available. This is where prompt tuning or P-tuning comes in. Because only a small number of parameters are trained while the LLM itself stays frozen, it is a cost-efficient way to tune prompts to get the desired results.

Benefits of Prompts, Prompt Engineering, and Prompt Engineers

The main advantage of prompt engineering is the ability to generate optimal outputs with minimal post-generation effort. The quality of generative AI output can vary, so expert practitioners are needed to review and edit it. By crafting precise prompts, prompt engineers help ensure that AI-generated output meets predetermined goals and standards, which minimizes the need for extensive post-processing.

Composing prompts for Google Bard is not the same as creating prompts for OpenAI's GPT-3 or GPT-4. Because Bard can access information through Google Search, it can be told to incorporate more recent information into its results. ChatGPT, on the other hand, is the stronger tool for reading and summarizing content, since that was its main purpose when it was built. Well-crafted prompts guide AI models to produce more relevant, accurate, and tailored responses, and they make long-term interactions with AI more effective and satisfying.

Engineers working in open-source settings are pushing generative AI to accomplish impressive feats that were not necessarily part of its original design, with some remarkable real-world outcomes. Engineers are incorporating generative AI into games to engage human players in truly responsive storytelling, and generative AI is even being used to gain new insights into the astronomical phenomenon of black holes. Researchers have also created an AI system that can translate between languages without being trained on parallel texts. As generative AI systems grow larger and more complex, prompt engineering will become increasingly important.

How to Write Effective Prompts

When writing prompts, keep the following points in mind:

Clarity

Be explicit about what you want the model to accomplish, and avoid ambiguity. For example, rather than "Tell me about dogs," choose "Provide a detailed description of the characteristics, behavior, and care required for domestic dogs."

Context

LLMs respond based on the immediate context of the prompt, so it is essential to set a clear background. For instance, the prompt "Translate the following English text to French: 'Hello, how are you?'" provides clear context and instructions, so it will give you the desired result.

Precision

Frame clear and precise questions. If you want to know the wonders of the world, write a prompt like “Tell me the seven modern wonders of the world.” Precise prompts are more likely to give you the desired results from the language model.
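The three tips can be combined into a reusable prompt structure. This is a hypothetical helper, not a standard API: the task line carries the clarity, an optional context line sets the background, and a list of requirements makes the request precise. It reuses the dog example from above; the context and requirements are made-up illustrations.

```python
from dataclasses import dataclass, field


@dataclass
class PromptSpec:
    """Hypothetical structure for a clear, context-rich, precise prompt."""

    task: str                                             # clarity: exactly what to do
    context: str = ""                                     # context: background for the model
    constraints: list[str] = field(default_factory=list)  # precision: scope, format, length

    def render(self) -> str:
        parts = [self.task]
        if self.context:
            parts.append(f"Context: {self.context}")
        if self.constraints:
            parts.append("Requirements:\n" + "\n".join(f"- {c}" for c in self.constraints))
        return "\n\n".join(parts)


spec = PromptSpec(
    task="Provide a detailed description of the characteristics, behavior, "
    "and care required for domestic dogs.",
    context="The reader is a first-time dog owner.",
    constraints=["Use a heading for each of the three topics", "Keep it under 300 words"],
)
print(spec.render())
```

Keeping the parts separate makes it easy to spot which one is missing when a prompt gives vague results.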

Prompt Engineering Use Cases

Prompt engineering is used across many verticals and industries.

In Text Generation

Prompts are central to generating many kinds of text. Well-known tools such as GPT and Gemini take prompts to generate text such as blog posts, messages, poems, and essays. They can also summarize long content into shorter forms, and question answering is one of the most widely used text generation formats.

In Image, Audio, and Video Generation

AI and prompting have been game-changers for the image, audio, and video industries. Tools like DALL·E and Stable Diffusion perform text-to-image generation: given a text prompt, they generate relevant images. Creating AI avatars for different purposes is a new trend, and with the right prompts, AI has created photorealistic images that are difficult to distinguish from real photographs.

In audio and video, text-to-audio and text-to-video generation have revolutionized the industry. Tools like Jukebox can generate music from textual prompts. Another popular trend is AI voiceover: with prompt engineering and AI, narrating a video or voicing an animated character has become easy.

AI-generated videos are increasingly common on the internet. With well-crafted prompts, AI can generate the motion a video requires. Video editing is another domain where AI is increasingly used, editing videos based on prompts and instructions.

In Software Development

AI has changed software development through code generation and testing. With simple prompts, it can generate detailed code within seconds. Prompting plays a vital role in coding, because a mistake in the prompt can produce wrong code, which then surfaces during debugging or testing.

Conclusion

Prompts and prompt engineering are shaping the future of AI and many other industries. Greater accuracy, creativity, and productivity are just a few of the benefits for those who take the time to learn and apply prompt engineering principles. With proficiency in this area, generative AI tools become useful partners that help users achieve their objectives and amplify their efforts.

Combining AI with human intelligence can pave your path to success. Learn about AI and lead the way.
