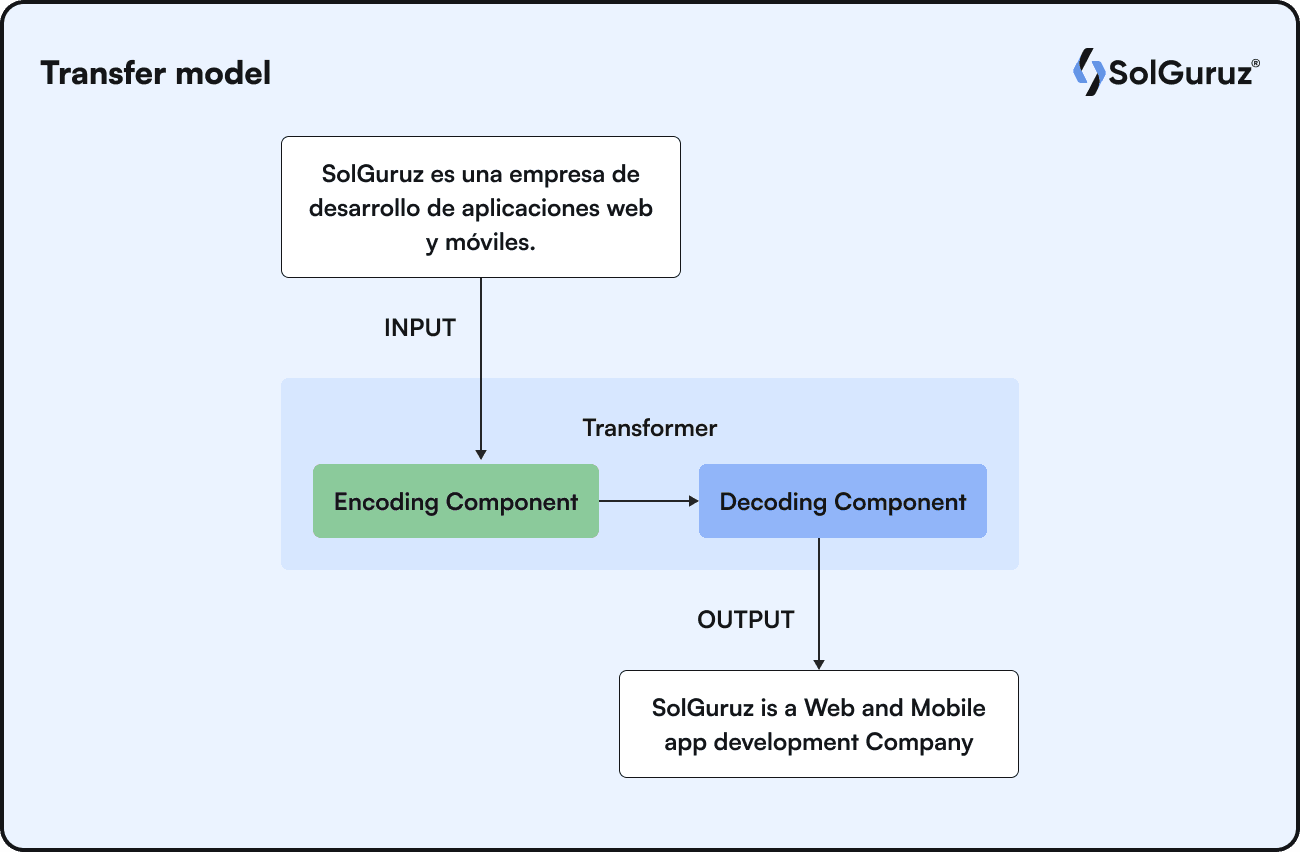

This is a simple representation of a transformer-based data model.


Generative AI, a subset of artificial intelligence, focuses on creating new content, such as text, images, audio, code, or data. Unlike traditional AI systems, which rely on predetermined rules and datasets, generative AI models can produce novel outputs based on the patterns they have learned from their training data.

The core model behind text-based generative AI is the large language model (LLM), or simply language model. An LLM is trained on enormous volumes of textual data and becomes skilled at producing human-like natural language by predicting the words that are likely to come next in a sequence. Generative AI applications use LLMs to produce a variety of unique outputs, such as essays or stories, or to answer questions, depending on the input.
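To make "predicting the next word" concrete, the sketch below uses a bigram table (counts of which word follows which) as a toy stand-in for a language model. This is an illustrative assumption: real LLMs use large neural networks over tokens rather than word counts, and the corpus and the function names `train_bigrams`, `predict_next`, and `generate` are hypothetical.

```python
from collections import Counter, defaultdict

def train_bigrams(text):
    """Count how often each word is followed by each other word."""
    words = text.lower().split()
    table = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        table[current][nxt] += 1
    return table

def predict_next(table, word):
    """Return the most frequent follower of `word`, or None if it was never seen."""
    followers = table.get(word.lower())
    if not followers:
        return None
    return followers.most_common(1)[0][0]

def generate(table, start, length=5):
    """Greedily extend `start` one predicted word at a time."""
    out = [start.lower()]
    for _ in range(length):
        nxt = predict_next(table, out[-1])
        if nxt is None:
            break
        out.append(nxt)
    return " ".join(out)

corpus = "the model reads text . the model predicts the next word . the next word follows the context ."
table = train_bigrams(corpus)
print(predict_next(table, "next"))  # word
print(generate(table, "the", 4))
```

Because `generate` always picks the single most frequent follower (greedy decoding), it returns the same continuation every time; real systems usually sample from a probability distribution instead.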

Generative AI is a more democratized form of AI: anyone can use it through simple text prompts, because language models take natural language as their input.

How Does Generative AI Work?

From the Technical Lens

The foundation of generative AI is the generative model, which learns the underlying probability distribution of a dataset and uses it to create new samples that resemble the original data. Generative Adversarial Networks (GANs), one of the best-known generative AI systems, use two neural networks, a generator and a discriminator, that play a competitive game to produce realistic data samples.
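The learn-then-sample idea can be sketched with the simplest possible generative model: a single Gaussian fitted to one-dimensional data. This is an assumption made for brevity; GANs and LLMs learn far richer distributions with neural networks. The height values and the function names `fit_gaussian` and `sample_new` are made up for illustration.

```python
import random
import statistics

def fit_gaussian(samples):
    """'Training': estimate the mean and standard deviation of the data."""
    return statistics.mean(samples), statistics.stdev(samples)

def sample_new(mean, std, n, seed=0):
    """'Generation': draw n new values from the learned distribution."""
    rng = random.Random(seed)
    return [rng.gauss(mean, std) for _ in range(n)]

# Hypothetical "real" data: heights in centimetres
real_heights_cm = [162.0, 170.5, 168.2, 175.1, 159.8, 181.3, 166.7, 172.4]
mu, sigma = fit_gaussian(real_heights_cm)
synthetic = sample_new(mu, sigma, 5)
print(f"learned mean={mu:.1f}, std={sigma:.1f}")
print([round(x, 1) for x in synthetic])
```

The generated values are new (none is copied from the input), yet they follow the same statistics as the original data, which is the property the paragraph above describes.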

Discriminative AI classifies inputs into categories; generative AI does not. The objective of a discriminative model is to evaluate incoming inputs according to what it learned from its training set. Conversely, the goal of a generative model is to produce new, artificial data.
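The contrast can be sketched in a few lines under simplifying assumptions: a nearest-mean classifier stands in for discriminative AI (it only assigns a label to an input), while sampling around a class mean stands in for generative AI (it produces a new input). The class names, values, and functions are hypothetical.

```python
import random

# Hypothetical 1-D training data (e.g. body weight in kg) for two classes
training = {"cat": [4.0, 4.5, 5.0], "dog": [20.0, 25.0, 30.0]}
means = {label: sum(vals) / len(vals) for label, vals in training.items()}

def classify(x):
    """Discriminative: map an input to the label whose mean is closest."""
    return min(means, key=lambda label: abs(x - means[label]))

def generate(label, spread=1.0, seed=None):
    """Generative: produce a new, artificial input for the given label."""
    rng = random.Random(seed)
    return means[label] + rng.uniform(-spread, spread)

print(classify(6.0))  # cat
print(generate("dog", seed=1))
```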

At the heart of generative AI are very large neural networks with a huge number of parameters, trained to learn how to choose the correct next word. The networks receive inputs and combine them with their parameters through layers of mathematical functions, and the output is generated from these calculations. The pre-trained model is then fine-tuned to give more precise outputs.
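The last step, turning the network's calculations into a choice of next word, is commonly done with a softmax over per-word scores (logits). The sketch below assumes the network has already produced scores for a tiny hypothetical vocabulary; the `temperature` parameter is a common sampling control, shown as an illustration rather than a description of any specific model.

```python
import math
import random

def softmax(logits, temperature=1.0):
    """Convert raw scores into probabilities that sum to 1."""
    scaled = [x / temperature for x in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def choose_next(vocab, logits, temperature=1.0, seed=None):
    """Sample the next word according to the softmax probabilities."""
    probs = softmax(logits, temperature)
    rng = random.Random(seed)
    return rng.choices(vocab, weights=probs, k=1)[0]

vocab = ["cat", "dog", "car"]
logits = [2.0, 1.0, 0.1]  # hypothetical scores produced by a network
print([round(p, 3) for p in softmax(logits)])
print(choose_next(vocab, logits, seed=42))
```

Lower temperatures concentrate probability on the top-scoring word (more predictable output); higher temperatures spread it out (more varied output).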

Fine-tuning means further training a pre-trained machine learning model on a focused dataset for a dedicated purpose. For instance, if the model is going to be used in the healthcare sector, fine-tuning involves training it on healthcare data, such as patient records and documentation on patient management and nursing.
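As a rough analogy, fine-tuning can be sketched with a bigram counting model: "pre-train" on general text, then keep the learned counts and continue updating them on a small healthcare corpus, weighted more heavily so the focused data shifts predictions. Real fine-tuning updates neural-network weights with gradient descent; the corpora, the `weight` parameter, and the function names are hypothetical.

```python
from collections import Counter, defaultdict

def update_counts(table, text, weight=1):
    """Add weighted bigram counts from `text` to an existing table."""
    words = text.lower().split()
    for current, nxt in zip(words, words[1:]):
        table[current][nxt] += weight
    return table

def predict_next(table, word):
    """Most frequent follower of `word`, or None if unseen."""
    followers = table.get(word)
    return followers.most_common(1)[0][0] if followers else None

# "Pre-training" on general-purpose text
model = update_counts(defaultdict(Counter), "a patient teacher helps . a patient teacher listens .")
before = predict_next(model, "patient")

# "Fine-tuning": keep the learned counts, continue training on focused healthcare text
update_counts(model, "the patient record lists allergies . the patient record was updated .", weight=3)
after = predict_next(model, "patient")
print(before, "->", after)  # teacher -> record
```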

How Can Businesses Use Generative AI?

This technology has enormous potential across industries, from creative professions like art and design to practical uses such as content development and product design. Generative AI can create realistic images, videos, text, and even music, opening up a world of possibilities for organizations eager to innovate and stand out in the market.

Use Cases of Gen AI

The use cases of generative AI below are grouped into broad categories: language, audio, visual, and other media.

Language

In language models, language is the primary medium. Large language models (LLMs) are the most popular examples of language-based generative models, and they support many activities, such as essay writing, code writing, text translation, and deciphering texts.

Audio

Music and audio form a rapidly developing area of generative AI. Certain generative models can recognize objects in videos and create sound effects for them, or use text inputs to create original tunes and short audio clips.

Visual

Generative AI is most widely used for visuals. This includes creating 3D graphics, videos, avatars, and other kinds of illustrations. Images can be generated in a range of artistic styles, and existing visuals can be edited and altered. Generative AI models can also create realistic images for virtual or augmented reality and 3D models for video games.

Synthetic Data

Synthetic data can be very useful for training AI models when real data is scarce, restricted, or does not cover corner cases. Using generative models to create synthetic data is one of the best solutions to the data challenges that many businesses face. The approach spans all modalities and use cases and is enabled by a process called label-efficient learning.
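One simple way to create synthetic samples for an under-represented case is to interpolate between existing examples and their nearest neighbours, the idea behind the SMOTE oversampling technique. This is a much simpler method than the generative models discussed in this article and is shown only as an illustration; the data points and the `synthesize` function are hypothetical.

```python
import math
import random

def synthesize(samples, n_new, k=2, seed=0):
    """Create n_new points on line segments between samples and their near neighbours."""
    rng = random.Random(seed)
    synthetic = []
    for _ in range(n_new):
        idx = rng.randrange(len(samples))
        base = samples[idx]
        # The k nearest other samples (Euclidean distance) to the chosen base point
        neighbours = sorted(
            (s for j, s in enumerate(samples) if j != idx),
            key=lambda s: math.dist(base, s),
        )[:k]
        other = rng.choice(neighbours)
        t = rng.random()  # random position along the segment, in [0, 1)
        synthetic.append(tuple(b + t * (o - b) for b, o in zip(base, other)))
    return synthetic

# Hypothetical rare corner cases with two numeric features each
rare_cases = [(1.0, 2.0), (1.5, 1.8), (2.0, 2.4), (1.2, 2.6)]
new_points = synthesize(rare_cases, n_new=6)
print(new_points)
```

Every new point lies between two real ones, so it stays plausible while adding variety where data was thin.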

Software Development

Generative AI has transformed code writing and software development. With generative AI tools like GitHub Copilot, ChatGPT, and AlphaCode, we can write code much more quickly and precisely.

Developers benefit from generative AI by getting code snippets, by making software testing more efficient through finding more faults, and by getting high-quality answers to coding puzzles. The end results are faster development cycles and better code quality, which ultimately improve software products and user experiences.

Benefits of Gen AI

The most appealing benefit of generative AI for businesses is undoubtedly efficiency: by automating repetitive tasks, it frees up employees' time, energy, and resources to focus on more important strategic objectives. This could result in lower personnel costs, greater operational efficiency, and insight into how well or poorly specific business processes are performing.

Automating tasks and saving time is another benefit of implementing generative AI. By handling repetitive tasks, it frees people to take on more complex work.

Generative AI has redefined personalization and customization. Content can be personalized with the images, text, or designs a user wants, which saves a lot of time and money.

Creativity is another area where AI assists humans. Generative AI solutions can help creative professionals and content creators with research, editing, marketing, and more.

Where Can AI Go Wrong?

AI brings with it many concerns that can diminish the benefits it provides. Among them, ethical concerns pose a very grave danger to humanity.

Ethical Concerns

The misuse of AI is a big concern. AI-generated deepfakes have caused, and can continue to cause, serious turmoil. Another ethical concern raised by publicly available AI tools is the risk of spreading misinformation: false information generated by AI, including deepfakes, can create clashes among the public. Addressing these risks is the focus of responsible AI, or AI ethics.

Bias

When a model is trained on the wrong data or on insufficient data, its output can be biased. Such incomplete or biased information can be dangerous and can hamper further analysis. Preventing bias also encourages the ethical use of AI.

Inaccurate Data

When the pre-training data is outdated or incomplete, the output generated by the AI can be inaccurate.

Another concern is AI overtaking humans. AI development is progressing rapidly, and there is an underlying fear that AI will take jobs or replace humans.

Gen AI Terminology Section

We’ll start with the most basic terms. We have all heard of ChatGPT, the most widely known application of AI.

GPT - Generative Pre-trained Transformer.

Generative - New data is generated through the generative model.

Pre-Trained - Before being adapted to specific tasks, the language model's parameters are trained on a large corpus of text, including internet data such as Wikipedia, books, and other information sources.

Transformers - Transformer models work by passing input data, which might be token sequences or other structured data, through a succession of layers that include self-attention mechanisms and feedforward neural networks.
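A minimal sketch of one transformer layer, assuming a single attention head, toy 2-dimensional token vectors, and no learned query/key/value projections (the tokens are used directly) to keep it short: each token is updated with scaled dot-product self-attention, then passed through a small feedforward network. Real transformers add learned projection matrices, multiple heads, residual connections, and layer normalization; the weights here are hypothetical.

```python
import math

def softmax(xs):
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(tokens):
    """Scaled dot-product attention with Q = K = V = tokens (no learned projections)."""
    d = len(tokens[0])
    outputs, all_weights = [], []
    for q in tokens:
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in tokens]
        weights = softmax(scores)  # how much this token attends to every token
        all_weights.append(weights)
        # Weighted average of the value vectors
        outputs.append([sum(w * v[i] for w, v in zip(weights, tokens)) for i in range(d)])
    return outputs, all_weights

def feedforward(vec, w1, w2):
    """Two-layer network with ReLU, applied to each position independently."""
    hidden = [max(0.0, sum(w * x for w, x in zip(row, vec))) for row in w1]
    return [sum(w * h for w, h in zip(row, hidden)) for row in w2]

def transformer_layer(tokens, w1, w2):
    attended, weights = self_attention(tokens)
    return [feedforward(v, w1, w2) for v in attended], weights

tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]  # three hypothetical token embeddings
w1 = [[1.0, 0.5], [0.5, 1.0], [-1.0, 1.0]]     # 2 inputs -> 3 hidden units
w2 = [[1.0, 0.0, 0.5], [0.0, 1.0, 0.5]]        # 3 hidden units -> 2 outputs
out, attn = transformer_layer(tokens, w1, w2)
print(out)
```

Stacking many such layers, each with its own learned weights, is what the "succession of layers" above refers to.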


Training Data - The massive dataset used to train the generative AI model. The quality and quantity of training data significantly impact the model's outputs.

Generative Adversarial Networks (GANs) - One of the best-known types of generative AI system. A GAN is made up of two neural networks, the generator and the discriminator, which are trained in competition to produce realistic data samples.

Generator - The neural network component of a GAN responsible for generating new data samples. It takes random noise or input data as input and produces synthetic data that resembles the training data.

Discriminator - The neural network component of a GAN responsible for distinguishing actual data samples from the training set from the synthetic samples produced by the generator.
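The competitive game between the generator and the discriminator can be summarized by the two loss functions a GAN alternately minimizes. The sketch assumes the discriminator outputs a probability that a sample is real and uses the common "non-saturating" generator loss; the example probabilities are made up rather than produced by trained networks.

```python
import math

EPS = 1e-12  # guards against log(0)

def discriminator_loss(d_real, d_fake):
    """Binary cross-entropy: reward high scores on real data and low scores on fakes.

    d_real / d_fake are the discriminator's probabilities that a real / generated
    sample is real.
    """
    return -(math.log(d_real + EPS) + math.log(1.0 - d_fake + EPS))

def generator_loss(d_fake):
    """Non-saturating loss: the generator wants its fakes to be scored as real."""
    return -math.log(d_fake + EPS)

# Early in training: the discriminator easily spots the fakes
print(round(discriminator_loss(0.9, 0.1), 3), round(generator_loss(0.1), 3))  # 0.211 2.303
# Idealized equilibrium: the discriminator can only guess (0.5 everywhere)
print(round(discriminator_loss(0.5, 0.5), 3), round(generator_loss(0.5), 3))  # 1.386 0.693
```

In training, the discriminator's weights are updated to lower the first loss and the generator's weights to lower the second, so each network's progress makes the other's task harder.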

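Autoencoder - A type of neural network design made up of an encoder network, which compresses the input into a compact representation, and a decoder network, which reconstructs the input from that representation. It is applied to problems such as data reconstruction, feature learning, and dimensionality reduction.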
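A minimal sketch of the encoder/decoder idea, assuming a tiny linear autoencoder: 2-D points that actually lie on a line are compressed into a single number (the bottleneck) and then reconstructed, with both halves trained by plain gradient descent. Real autoencoders use deep nonlinear networks and a training framework; the data, learning rate, and function names here are hypothetical.

```python
# Hypothetical 2-D data that actually lies on a 1-D line (y = 2x)
data = [(t, 2.0 * t) for t in (-1.0, -0.5, 0.5, 1.0)]

def encode(w, p):
    """Encoder: compress a 2-D point into a single number (the code)."""
    return w[0] * p[0] + w[1] * p[1]

def decode(v, h):
    """Decoder: expand the code back into a 2-D point."""
    return (v[0] * h, v[1] * h)

def reconstruction_loss(w, v):
    """Mean squared error between the inputs and their reconstructions."""
    total = 0.0
    for p in data:
        r = decode(v, encode(w, p))
        total += (p[0] - r[0]) ** 2 + (p[1] - r[1]) ** 2
    return total / len(data)

def train(steps=1000, lr=0.05):
    """Plain gradient descent on encoder weights w and decoder weights v."""
    w, v = [0.1, 0.1], [0.1, 0.1]
    n = len(data)
    for _ in range(steps):
        gw, gv = [0.0, 0.0], [0.0, 0.0]
        for p in data:
            h = encode(w, p)
            err = [v[i] * h - p[i] for i in range(2)]  # reconstruction minus input
            for i in range(2):
                gv[i] += 2 * err[i] * h / n
            dh = 2 * (err[0] * v[0] + err[1] * v[1]) / n  # gradient w.r.t. the code h
            for j in range(2):
                gw[j] += dh * p[j]
        w = [w[j] - lr * gw[j] for j in range(2)]
        v = [v[i] - lr * gv[i] for i in range(2)]
    return w, v

initial = reconstruction_loss([0.1, 0.1], [0.1, 0.1])
w, v = train()
print(f"loss before: {initial:.3f}, after: {reconstruction_loss(w, v):.6f}")
```

Because the toy data has only one real degree of freedom, a one-number code is enough to reconstruct it almost perfectly, which is the dimensionality-reduction use named in the definition.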

Data Augmentation - The process of using generative models to create additional data samples in order to artificially increase the size of a dataset. In machine learning, data augmentation is frequently used to train models when very little data is available.
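A minimal sketch of the idea, assuming numeric feature vectors: each original sample is copied several times with small random noise added, keeping its label. Noise jitter is a simple stand-in chosen for brevity; augmentation with a generative model, as described above, would replace the noise step with samples drawn from a trained model. The dataset, labels, and the `augment` function are hypothetical.

```python
import random

def augment(dataset, copies=3, noise=0.05, seed=0):
    """Return the original (features, label) pairs plus noisy copies of each."""
    rng = random.Random(seed)
    augmented = list(dataset)
    for features, label in dataset:
        for _ in range(copies):
            jittered = [x + rng.gauss(0.0, noise) for x in features]
            augmented.append((jittered, label))
    return augmented

small_dataset = [([0.2, 0.7], "healthy"), ([0.9, 0.1], "at_risk")]
bigger = augment(small_dataset)
print(len(small_dataset), "->", len(bigger))  # 2 -> 8
```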

Deepfake - Synthetic media, including audio, video, and image files, created with artificial intelligence techniques and frequently intended to deceive or manipulate. Deepfakes have raised concerns about how generative AI technology can be abused to produce false or misleading content.

As an economist at the World Economic Forum aptly put it, “AI won’t take up jobs, but someone with the right knowledge of AI might.”
