AI Hallucinations Explained: Causes, Examples, and Solutions

AI hallucinations happen when a model confidently generates information that is incorrect or completely made up. This blog explains why these errors occur, walks through real examples of hallucinations, and shows how developers can reduce them to build more reliable AI systems.

“Eat a small rock every day to supplement your mineral diet.”

Or, if that is not to your taste:

“Add ⅛ cup of non-toxic glue to your pizza to stick the cheese.”

These are classic cases of Google's AI hallucinating. They went viral around May 2024.

Let’s read more in this section of our Gen AI Wiki series.

What are AI Hallucinations?

AI hallucinations are inaccurate or false outcomes produced by AI models.

Several factors can cause these errors, including biases in the data used to train the model, insufficient training data, or incorrect assumptions made by the model. For AI systems that are used to make critical judgments, such as financial trading or medical diagnosis, hallucinations can be a serious problem.

In one line, AI hallucinations can be defined as “the false or fabricated responses a generative AI model gives.”

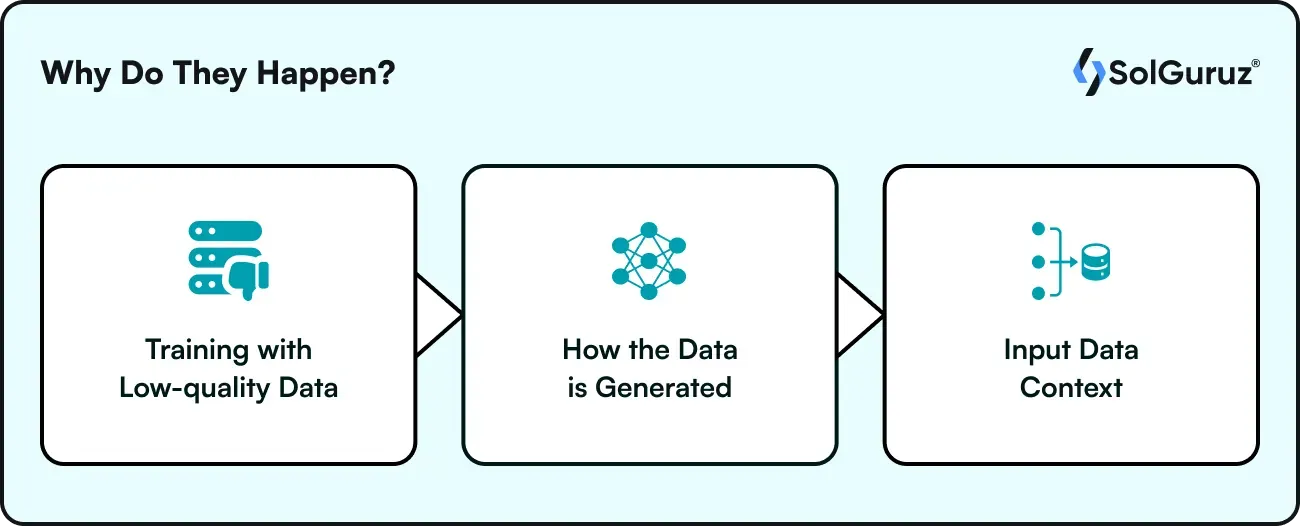

Why Do They Happen?

Here are some reasons why AI hallucinations happen:

Training with Low-quality Data

When the data used to train the LLM contains inaccurate, partial, or faulty information, hallucinations may result.

For LLMs to generate output that is accurate and relevant to the user who supplied the input prompt, a substantial amount of training data is necessary.

However, there may be biases, errors, noise, or inconsistencies in this training data; as a result, the LLM generates inaccurate and occasionally utterly nonsensical outputs.
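As a rough illustration of what checking training data for noise and inconsistencies can look like, here is a minimal sketch in Python. The `(prompt, answer)` pair format and the function name `clean_training_examples` are assumptions made for this example, not part of any particular training pipeline.

```python
from collections import defaultdict

def clean_training_examples(examples):
    """Drop empty and duplicate rows, and set aside prompts whose answers conflict.

    `examples` is a list of (prompt, answer) string pairs; this format is an
    assumption made for the sketch.
    """
    answers_by_prompt = defaultdict(set)
    for prompt, answer in examples:
        prompt, answer = prompt.strip(), answer.strip()
        if prompt and answer:  # skip rows with a missing prompt or answer
            answers_by_prompt[prompt.lower()].add(answer.lower())

    clean, conflicting = [], []
    for prompt, answers in answers_by_prompt.items():
        if len(answers) == 1:
            clean.append((prompt, next(iter(answers))))
        else:
            conflicting.append((prompt, sorted(answers)))
    return clean, conflicting

raw = [
    ("Capital of France?", "Paris"),
    ("capital of france?", "Paris"),                     # duplicate after normalization
    ("Boiling point of water at sea level?", "100 C"),
    ("Boiling point of water at sea level?", "90 C"),    # contradicts the row above
    ("Largest planet?", ""),                             # missing answer
]
clean, conflicting = clean_training_examples(raw)
print(clean)        # rows that are safe to keep
print(conflicting)  # rows a person should look at before training
```

Real datasets need far more than this (source vetting, bias audits, label review), but even simple checks like these catch contradictions before the model learns them.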

How the Data is Generated

Even with a consistent and dependable data collection that includes high-quality training data, hallucinations may nevertheless arise as a result of the training and generation techniques employed.

For instance, the transformer may decode erroneously, or the model may be biased by the text it generated earlier in the same response, and either can cause the system to hallucinate. Additionally, models may be biased toward certain generic phrases, which can affect the text they produce or cause them to invent a response.
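One way to see how the generation step alone can introduce errors is temperature sampling. The sketch below is a simplified illustration, not how any specific model is implemented; the token list and scores are invented for the example. At a low temperature the most likely token dominates, while at a higher temperature less likely (and possibly wrong) tokens are picked more often.

```python
import math
import random

def softmax_with_temperature(logits, temperature):
    """Turn raw scores into probabilities; a higher temperature flattens them."""
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def sample_token(tokens, logits, temperature, rng):
    """Draw one token at random according to the temperature-scaled probabilities."""
    probs = softmax_with_temperature(logits, temperature)
    return rng.choices(tokens, weights=probs, k=1)[0]

# Hypothetical next-token scores for "The capital of Australia is ..."
tokens = ["Canberra", "Sydney", "Melbourne"]
logits = [3.0, 2.0, 1.0]

for temperature in (0.2, 1.0, 2.0):
    probs = softmax_with_temperature(logits, temperature)
    print(temperature, [round(p, 3) for p in probs])

print(sample_token(tokens, logits, 2.0, random.Random(0)))
```

Even when "Canberra" has the highest score, a high-temperature sample can still land on "Sydney", which is a wrong answer produced purely by the decoding step.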

Input Data Context

Hallucinations may occur if the human user's input request is ambiguous, inconsistent, or contradictory. Users have control over the inputs they give the AI system, but they have no control over the caliber of training data or the techniques employed. They can improve the AI system's output by refining their inputs and giving it the appropriate context.

How Do They Occur?

By identifying patterns in the data, AI models are trained to generate predictions. However, the completeness and quality of the training data frequently determine how accurate these forecasts are.

Incomplete, skewed, or otherwise defective training data can cause the AI model to pick up incorrect patterns, which may result in false predictions or hallucinations.

An AI model trained on a dataset of medical images, for instance, might learn to recognize cancer cells.

The AI model might, however, mistakenly assume that healthy tissue is malignant if the dataset contains no pictures of healthy tissue.
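The effect of a missing class can be shown with a toy classifier. This is a minimal sketch, assuming each scan is reduced to a single made-up "irregularity" score; the nearest-centroid method is chosen only because it is short, not because real medical models work this way.

```python
def train_nearest_centroid(samples):
    """Average the feature values per label; samples are (feature, label) pairs."""
    sums, counts = {}, {}
    for feature, label in samples:
        sums[label] = sums.get(label, 0.0) + feature
        counts[label] = counts.get(label, 0) + 1
    return {label: sums[label] / counts[label] for label in sums}

def predict(centroids, feature):
    """Return the label whose centroid is closest to the feature."""
    return min(centroids, key=lambda label: abs(centroids[label] - feature))

# Hypothetical one-number "cell irregularity" scores, invented for illustration.
malignant_only = [(0.9, "malignant"), (0.8, "malignant"), (0.85, "malignant")]
balanced = malignant_only + [(0.1, "healthy"), (0.2, "healthy"), (0.15, "healthy")]

healthy_scan = 0.12
print(predict(train_nearest_centroid(malignant_only), healthy_scan))  # malignant
print(predict(train_nearest_centroid(balanced), healthy_scan))        # healthy
```

A model that has only ever seen malignant examples has no other answer available, so it labels a clearly healthy scan as malignant.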

AI hallucinations can happen for a variety of reasons, including flawed training data. Inadequate grounding could also be a contributing issue.

Real-world knowledge, physical characteristics, or factual information may be difficult for an AI model to comprehend effectively.

Because of this lack of foundation, the model may produce results that appear believable but are, in fact, erroneous, irrelevant, or illogical. This can even go so far as to create links to nonexistent websites.
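Invented links are one of the easier hallucinations to screen for. The sketch below, which uses only the Python standard library, pulls URLs out of a model's answer and flags any whose domain is not on a list you already trust. The allowlist approach and the names `find_unverified_links` and `known_domains` are assumptions for this example; confirming that a page actually exists would additionally require an HTTP request.

```python
import re
from urllib.parse import urlparse

URL_PATTERN = re.compile(r"https?://[^\s)\]>]+")

def find_unverified_links(text, known_domains):
    """Return URLs in `text` whose host is not in the set of known domains."""
    unverified = []
    for url in URL_PATTERN.findall(text):
        url = url.rstrip(".,;:")  # drop sentence punctuation stuck to the URL
        host = (urlparse(url).hostname or "").lower()
        if host.startswith("www."):
            host = host[4:]
        if host not in known_domains:
            unverified.append(url)
    return unverified

answer = (
    "See https://www.python.org/doc/ for details, or the guide at "
    "https://totally-real-docs.example/ai-safety."
)
print(find_unverified_links(answer, known_domains={"python.org"}))
# ['https://totally-real-docs.example/ai-safety']
```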

An example of this would be an AI model built to summarize news items that adds material not present in the original article, or even made-up content.
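A very rough way to catch this kind of addition is to check whether each sentence of a summary is supported by words in the source. The following is a minimal sketch under that assumption; word overlap is a crude stand-in for real fact checking, and the stopword list and the 0.6 threshold are arbitrary choices for the example.

```python
import re

STOPWORDS = {"a", "an", "the", "and", "or", "of", "to", "in", "on", "for",
             "is", "are", "was", "were", "by", "with", "that", "its", "it"}

def content_words(text):
    """Lowercased words with common filler words removed."""
    return {w for w in re.findall(r"[a-z0-9']+", text.lower()) if w not in STOPWORDS}

def unsupported_sentences(source, summary, min_overlap=0.6):
    """Return summary sentences whose content words mostly do not appear in the source."""
    source_words = content_words(source)
    flagged = []
    for sentence in re.split(r"(?<=[.!?])\s+", summary.strip()):
        words = content_words(sentence)
        if not words:
            continue
        overlap = len(words & source_words) / len(words)
        if overlap < min_overlap:
            flagged.append(sentence)
    return flagged

source = "The city council approved a new bike lane on Main Street on Tuesday."
summary = ("The city council approved a new bike lane. "
           "The mayor resigned after the vote.")
print(unsupported_sentences(source, summary))
# ['The mayor resigned after the vote.']
```

The second sentence is flagged because nothing in the source mentions a mayor or a resignation.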

Developers working with AI models should be aware of these possible reasons for AI hallucinations.

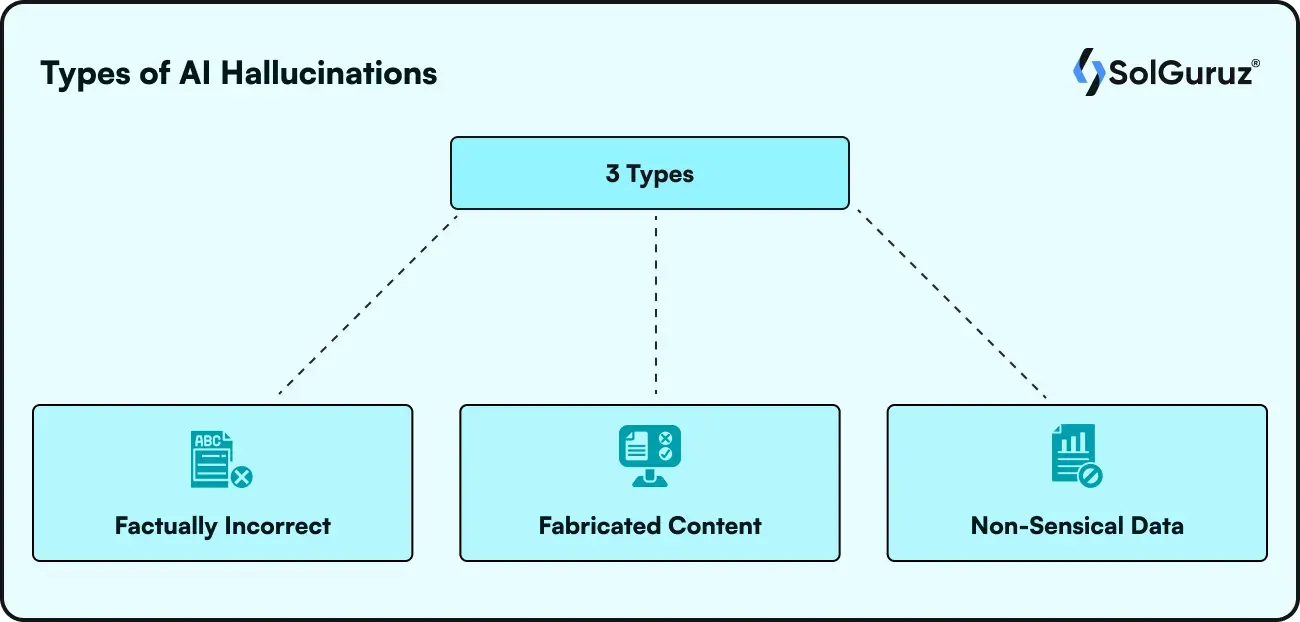

Types of AI Hallucination

Hallucinations can be broadly categorized as follows:

1. Factually Incorrect

When an AI model produces inaccurate data, such as historical or scientific misrepresentations, this is known as a factual error.

In mathematics, for instance, even well-developed models have struggled to maintain consistent accuracy.

Older models frequently make mistakes on simpler math problems. Newer models, despite their improvements, still struggle with more complex mathematical tasks, especially those that involve rare numbers or circumstances that are not well represented in their training data.
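Arithmetic is one area where a model's claims can be checked mechanically. Here is a minimal sketch that finds simple integer claims of the form `a op b = c` in a model's output and recomputes them. The sample output string is invented for illustration, and the pattern only covers addition, subtraction, and multiplication of whole numbers.

```python
import operator
import re

OPS = {"+": operator.add, "-": operator.sub, "*": operator.mul}
CLAIM = re.compile(r"(\d+)\s*([+\-*])\s*(\d+)\s*=\s*(\d+)")

def check_arithmetic(text):
    """Find 'a op b = c' claims on integers and recompute each one."""
    results = []
    for a, op, b, claimed in CLAIM.findall(text):
        actual = OPS[op](int(a), int(b))
        results.append((f"{a} {op} {b}", int(claimed), actual, actual == int(claimed)))
    return results

# Hypothetical model output: the first claim is right, the second is off.
model_output = "First, 12 * 12 = 144. Then 7919 * 6841 = 54173869."
for expression, claimed, actual, ok in check_arithmetic(model_output):
    print(expression, "claimed", claimed, "actual", actual, "ok" if ok else "MISMATCH")
```

The small product checks out, while the product of the two larger, rarer numbers does not, which mirrors the pattern described above.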

2. Fabricated Content

Sometimes, when an AI model is unable to provide an accurate response, it will create a completely made-up narrative to justify its inaccurate answer.

(Such a classic human trait. AI is picking up quickly😄)

The risk that the model will fabricate content increases with the topic's level of obscurity or unfamiliarity.

For example, if you give an AI model a topic it hasn't been trained on, it may still give a confident but totally incorrect answer. That's dangerous.

Combining two facts presents another difficulty, particularly for older models, even when the model "knows" both.

3. Nonsensical Data

AI-generated output can lack genuine meaning or coherence despite appearing polished and grammatically perfect, especially when the user's prompts contain contradictory information.

This occurs because, rather than actually comprehending the text they generate, language models are built to anticipate and organize words based on patterns in their training data.

As a result, the output may sound convincing and read easily, but it will ultimately make little sense because it will not be able to communicate ideas that are meaningful or logical.
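The idea of predicting words from patterns, without understanding them, can be seen in a tiny bigram model. This is a deliberately simplified sketch: real language models are vastly larger and use neural networks, but the principle of choosing a plausible next word based on what followed it in training text is similar. The corpus here is invented for the example.

```python
import random
from collections import defaultdict

def build_bigram_model(text):
    """Map each word to the list of words that followed it in the text."""
    words = text.split()
    followers = defaultdict(list)
    for current, nxt in zip(words, words[1:]):
        followers[current].append(nxt)
    return followers

def generate(followers, start, length, rng):
    """Repeatedly pick a word that followed the previous one in the training text."""
    output = [start]
    for _ in range(length - 1):
        options = followers.get(output[-1])
        if not options:
            break
        output.append(rng.choice(options))
    return " ".join(output)

corpus = (
    "the model predicts the next word . the next word follows the pattern . "
    "the pattern predicts the model ."
)
followers = build_bigram_model(corpus)
print(generate(followers, "the", 12, random.Random(0)))
```

Every pair of neighboring words in the output has been seen before, so the text looks locally fluent, yet nothing guarantees the sentence as a whole says anything true or meaningful.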

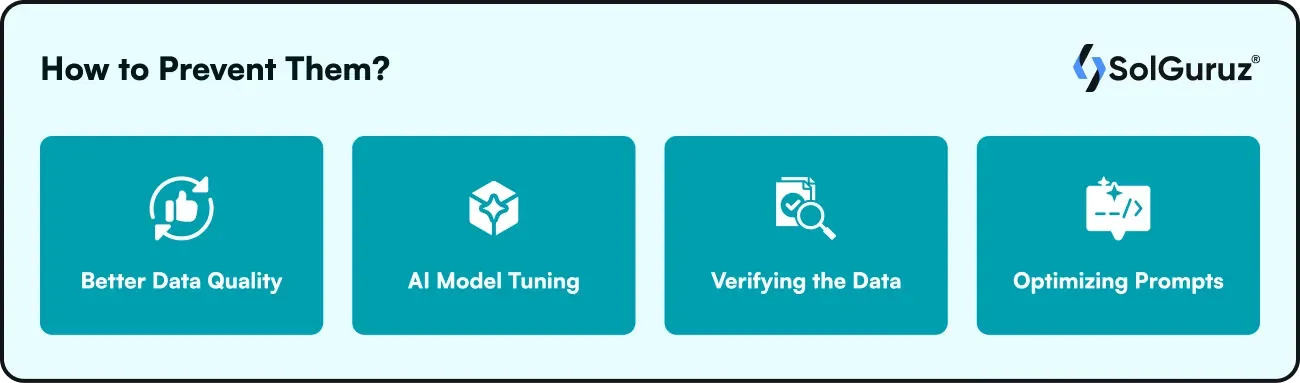

How to Prevent Them?

Since hallucinations have identifiable causes, there are also ways to reduce them. Here are some of them:

Better Data Quality

One of the best strategies for model developers to reduce AI hallucinations is to use high-quality training data. Developers can lower the possibility that models will produce inaccurate or deceptive results by making sure that training datasets are representative, diverse, and free of major biases.

By finding gaps and filling them with more pertinent data, methods like data augmentation and active learning can help improve the quality of datasets.
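One common form of active learning is uncertainty sampling: label the examples the current model is least sure about, since those mark the gaps in its training data. The sketch below assumes a binary classifier that outputs a probability per example; the example ids and scores are hypothetical.

```python
def select_for_labeling(predictions, budget):
    """Pick the examples the model is least sure about.

    `predictions` maps an example id to the model's probability for the
    positive class; values near 0.5 mean the model is uncertain.
    """
    by_uncertainty = sorted(predictions, key=lambda ex: abs(predictions[ex] - 0.5))
    return by_uncertainty[:budget]

# Hypothetical model scores on unlabeled examples.
predictions = {"doc-1": 0.98, "doc-2": 0.52, "doc-3": 0.07, "doc-4": 0.41, "doc-5": 0.75}
print(select_for_labeling(predictions, budget=2))
# ['doc-2', 'doc-4']
```

The selected examples would then go to human annotators, and the newly labeled data is added to the training set before the next round.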

AI Model Tuning

AI models must be adjusted and improved to reduce hallucinations and increase overall dependability. These procedures lessen errors, increase the relevance of results, and match a model's behavior to user expectations.

Fine-tuning is particularly useful for tailoring a general-purpose model to particular use cases, making sure it functions well in certain situations without producing inaccurate or irrelevant results.

Verifying the Data

By comparing AI-generated outputs to reliable sources or existing knowledge, human reviewers can identify mistakes, fix inaccuracies, and avert potentially dangerous outcomes.

Human review adds another level of scrutiny to the workflow, especially for applications like law or medicine, where mistakes can impact someone’s life.
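In practice, reviewing every output by hand rarely scales, so teams often route only some outputs to a person. Here is a minimal sketch of one possible routing rule. The `Draft` fields, the confidence score (assumed to come from the model or a separate scorer), and the 0.8 threshold are all assumptions made for illustration.

```python
from dataclasses import dataclass

@dataclass
class Draft:
    text: str
    confidence: float  # assumed to come from the model or a separate scorer
    domain: str

HIGH_STAKES_DOMAINS = {"medical", "legal"}

def needs_human_review(draft, min_confidence=0.8):
    """Route to a reviewer if the domain is high-stakes or confidence is low."""
    return draft.domain in HIGH_STAKES_DOMAINS or draft.confidence < min_confidence

drafts = [
    Draft("Your order ships in 3 days.", confidence=0.95, domain="retail"),
    Draft("This dosage is safe for children.", confidence=0.97, domain="medical"),
    Draft("The warranty covers water damage.", confidence=0.55, domain="retail"),
]
review_queue = [d for d in drafts if needs_human_review(d)]
auto_send = [d for d in drafts if not needs_human_review(d)]
print(len(review_queue), "for review,", len(auto_send), "sent automatically")
```

Note that the medical draft goes to review even though its confidence is high: a confident answer can still be a hallucination, so high-stakes domains are reviewed regardless of score.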

Optimizing Prompts

Careful prompt design is crucial for reducing AI hallucinations from the perspective of end users. A clear and detailed prompt provides the AI model with a stronger foundation for producing relevant outcomes, whereas a vague one may result in a hallucinated or irrelevant response.

Several prompt engineering techniques can be used to improve output dependability. For instance, dividing difficult tasks into smaller, easier-to-manage processes lessens the cognitive load on the AI and lowers the possibility of mistakes.
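To make this concrete, here is a minimal sketch of a prompt builder that supplies context, gives the model permission to say it does not know, and breaks the task into ordered steps. The wording of the template and the function name `build_prompt` are assumptions for this example, not a prescribed format for any particular model.

```python
def build_prompt(question, context, steps):
    """Assemble a prompt that states the context, the task, and ordered sub-steps."""
    lines = [
        "Answer using only the context below.",
        "If the context does not contain the answer, say that you do not know.",
        "",
        "Context:",
        context.strip(),
        "",
        f"Question: {question.strip()}",
        "",
        "Work through these steps in order:",
    ]
    lines += [f"{i}. {step}" for i, step in enumerate(steps, start=1)]
    return "\n".join(lines)

prompt = build_prompt(
    question="Which plan is cheaper over 12 months?",
    context="Plan A costs $10 per month. Plan B costs $99 per year.",
    steps=[
        "Compute the 12-month cost of Plan A.",
        "Compute the 12-month cost of Plan B.",
        "Compare the two totals and state which is lower.",
    ],
)
print(prompt)
```

Compared with simply asking "Which plan is cheaper?", this version leaves the model less room to guess at missing details.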

You can read more about prompt engineering and its different techniques.

Some Use Cases of Hallucination

Hallucination is, in general, a relative and subjective phenomenon. However, it does have useful applications in some areas.

Creative Fields and Art

AI hallucination presents a fresh method of artistic production, giving designers, artists, and other creatives a means of producing unique and visually striking graphics. Art always calls for a fresh and creative perspective, and AI hallucinations can supply one.

Thanks to AI's hallucinatory qualities, artists can create bizarre and dreamlike pictures that may inspire new art forms and genres.

Gaming Industry

AI hallucination can also improve VR and game immersion. Game developers and VR designers can create new worlds that elevate the user experience by using AI models to generate unexpected virtual settings. Hallucinations can also give game encounters a sense of surprise, unpredictability, and originality.

Closing in

Hallucinations are surely a thing to resolve in AI, but surely GPT-4o is trained for “sarcasm”. This is progress. Mimicking is an advanced human action that requires great training.

Well, talking about progress, AI models are surely making progress through better training.

They are being trained to cover up like humans, using sarcasm as a shield to cover inaccurate answers or answers they don’t know.

Surely, a positive sign.

See you in the next AI Wiki!
